Electrification Without Electricity: An Epic Failure in Planning for Critical Infrastructure

Executive Summary

The U.S. economy has been electrifying ever since Thomas Edison built the first commercial central power station in the United States in 1882 on Pearl Street in New York City. Two key benefits of using electricity have driven this ongoing change. First, for many applications (e.g., refrigerators versus iceboxes, washing machines versus washboards, incandescent lightbulbs versus candles), electricity provides operationally superior ways to perform a task, compared with a nonelectric process or machine. Second, many more applications (e.g., motors, computers, televisions, MRI machines, lasers) are possible only because of electricity. The unfolding of where, how much, and how fast both phenomena occur has driven the long march of electrification.

Despite this inexorable growth, many federal and state policymakers believe that mandates are needed to electrify almost everything, and rapidly. For example, many states have adopted California’s “Advanced Clean Car” rules, which mandate that by 2035, all new cars and light trucks sold be electric. Building rules, such as New York City’s Local Law 97, which requires all multiunit residential buildings greater than 25,000 square feet to replace existing gas- and oil-fired boilers with electric heat pumps, have also proliferated.

At the same time, many states have enacted legislation to mandate that electric utilities produce electricity solely using zero-emissions resources such as wind and solar power. Some of these mandates will take effect as early as 2030.

Regardless of these policies’ putative merits, all share what should be an uncontroversial trait: they require sufficient supplies of power and the supporting infrastructure to deliver it (transmission lines, transformers, neighborhood poles and wires, etc.) to ensure that the electricity required will be reliable and affordable.

Yet, at the behest of regulators and politicians, utility companies are increasingly ignoring this reality and instead focusing on policies that emphasize rationing, primarily through higher prices, but also via restrictions on consumers’ access to electricity. In other words, rather than designing an electric system to meet customers’ requirements, utilities are focused on constraining customers’ access to electricity and trying to accommodate growth in demand mainly by using existing electrical power systems.

Meanwhile, retail electric rates are increasing, and at an increasing pace, even though wholesale electric prices remain low or moderate. In California, for example, between the second quarter of 2020 and the second quarter of 2024, average residential electricity prices increased 73%, from 19.5 cents/kWh to 33.8 cents/kWh. Over the same four-year period, the average price paid by commercial customers increased 43%, and the average price paid by industrial customers increased 52%. These increases followed price increases of more than 30% in all customer categories between 2010 and 2020.

These increases in average prices do not tell the full story of how customers have been adversely affected. California’s major electric utilities (and many others) have instituted time-of-use (TOU) pricing, which charges—i.e., penalizes—consumers with higher rates when electricity demand peaks, in order to encourage consumers to reduce electricity usage. For example, in the summer of 2024, San Diego Gas & Electric charged residential customers 56.1 cents/kWh between the hours of 4 p.m. and 9 p.m. On “Reduce Your Use Event” days, which the company can declare 18 times per year, it charges residential customers $1.16/kWh during these same hours. Running a typical home air conditioner would cost a residential customer over $17 for those five hours. Residential customers of Southern California Edison face even higher peak TOU rates: as much as 75 cents/kWh. These rates are prohibitively expensive for lower-income customers, especially those who live inland where summer temperatures often exceed 100 degrees.
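The $17 figure follows from simple arithmetic: energy used (kWh) times the per-kWh rate. The report does not state the air conditioner's power draw, so the sketch below assumes a hypothetical steady 3 kW load, which is roughly what the $17 figure implies; a real unit's draw varies with its size and the outdoor temperature.

```python
# Cost of running an air conditioner through SDG&E's 4 p.m.-9 p.m. peak window.
# ASSUMPTION: a hypothetical 3 kW average draw; the report does not state the load.
AC_LOAD_KW = 3.0
PEAK_HOURS = 5  # 4 p.m. to 9 p.m.

# Summer 2024 residential rates quoted above, in dollars per kWh.
RATES_PER_KWH = {
    "regular on-peak": 0.561,
    "Reduce Your Use Event": 1.16,
}

def peak_window_cost(load_kw: float, hours: float, rate_per_kwh: float) -> float:
    """Energy consumed (kW x hours = kWh) multiplied by the rate, in dollars."""
    return load_kw * hours * rate_per_kwh

for label, rate in RATES_PER_KWH.items():
    cost = peak_window_cost(AC_LOAD_KW, PEAK_HOURS, rate)
    print(f"{label}: ${cost:.2f}")
```

Under these assumptions the same five hours of cooling cost about twice as much on an event day as on an ordinary summer weekday, and the cost scales linearly with the assumed load.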

Besides TOU pricing, utilities are introducing direct controls to reduce electricity consumption. These controls enable utilities to remotely prevent electric vehicle chargers from operating at all in the early evening, or to shut off air conditioners or water heaters.

At the same time as all of the above, the overall reliability of electric systems is decreasing, even when major events such as hurricanes or wildfires are excluded. For example, over the last decade, the amount of time that customers of California’s Pacific Gas & Electric have been blacked out has doubled. Despite unaffordable TOU prices for many consumers and decreasing reliability, a dozen states are following California’s model for electricity planning, and more are being urged to do so.

Rather than responding to policy directives requiring consumers to increase their reliance on electricity by ensuring adequate supplies, adequate infrastructure, and affordable prices, utilities are responding—again, often at the insistence of regulators and politicians—by “managing,” that is, by restricting, consumers’ access to the electricity they are being forced to rely on.

The justifications for this approach are embedded in utility planning methods, which focus on “least-cost” strategies to meet growing electricity demand. But least cost is not the same as maximum value. In pursuing efforts to reduce the need for new infrastructure and new supplies, utilities and regulators are ignoring the costs borne by consumers: direct costs that punish consumption, and indirect costs that force consumers to adjust their behavior or that depress economic growth. Ironically, the so-called least-cost approach to utility planning is antithetical to advocates’ own claims that more electrification is good for the economy, consumers, and the environment.

The eagerness to accelerate electrification is driven by aspirations to reduce carbon dioxide emissions. But by making emissions reductions the monomaniacal focus of utility planning, policymakers are ignoring the long-standing sine qua non of utility planning: electricity needs to be affordable and available when consumers need it. As a result, policymakers are impeding society’s ability to capture the value of increasing electrification.

If policymakers want to meet the dual goals of greater electrification and slower growth in carbon dioxide emissions, the rational policy framework would be to end mandatory electrification efforts; to end punishing electric pricing regimes; to end policies that restrict consumers’ ability to use electricity when they want it; and to end subsidies and mandates for intermittent wind and solar power, which are destabilizing electric grids. Instead, policymakers should emphasize natural gas and nuclear power, which can limit carbon dioxide emissions at a far lower cost.

Introduction

The ongoing electrification of the U.S. economy over the past 140 years has been driven by two key benefits of using kilowatt-hours to power machines or processes: in many applications, electricity offers operationally superior, and thus more economical, ways to perform a task, compared with a nonelectric process or machine; and in many more applications, the device or process is possible only with the use of electricity. The unfolding of where, how much, and how fast both phenomena occur has driven the long march of electrification.

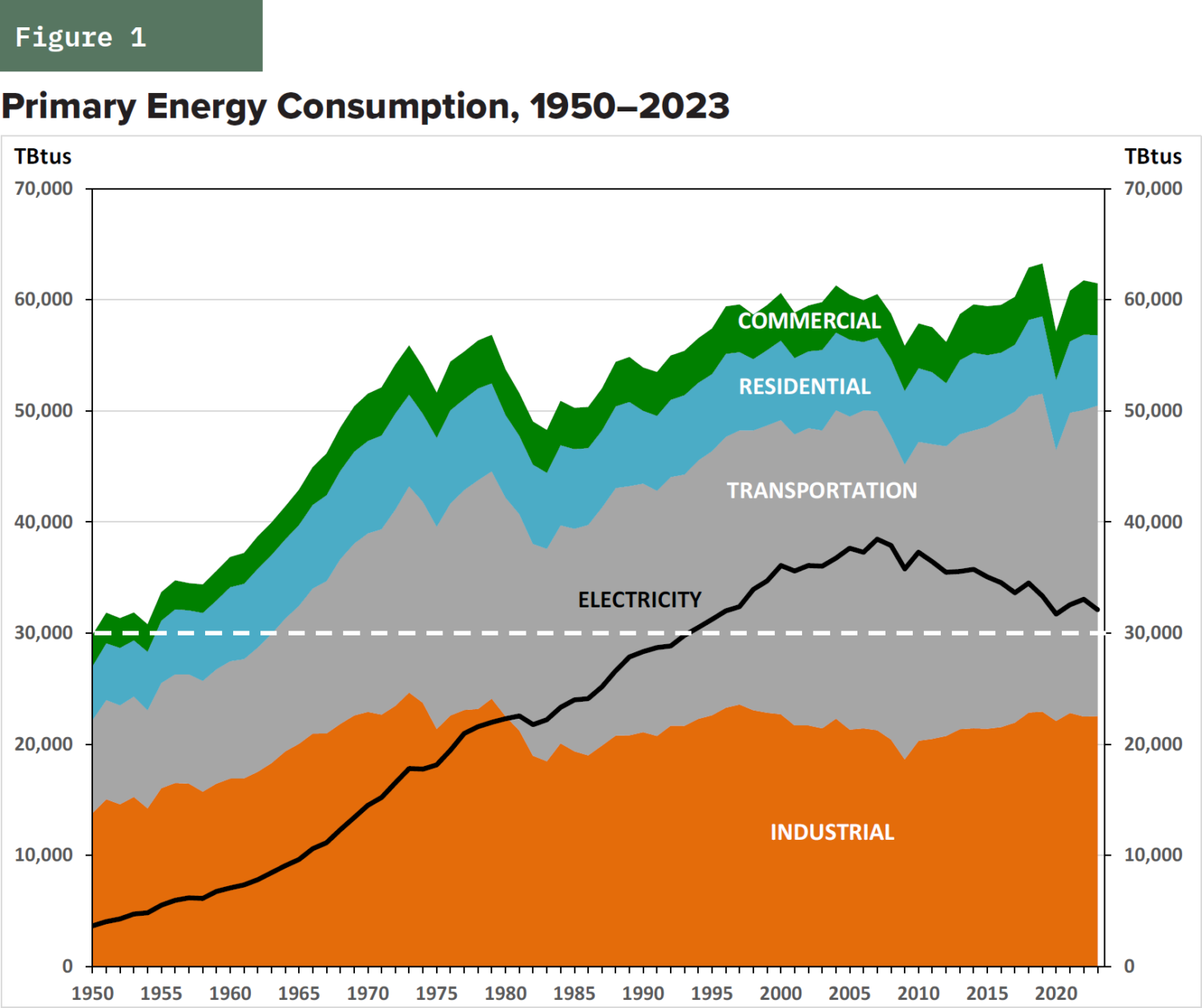

Between 1920 and 2023, net electricity consumption increased 80-fold, from about 50 terawatt-hours (TWh) in 1920 to about 4,000 TWh in 2023.[1]U.S. Census Bureau, Historical Statistics of the United States, Colonial Times to 1970, chapter S; U.S. Energy Information Administration (EIA), Electric Power Monthly, table 5.1. Over that same period, total primary energy consumption increased about fourfold, from about 15 quadrillion Btus (quads) to over 60 quads.[2]Institute for Global Sustainability, Boston University, “United States Electricity History in Four Charts,” Feb. 21, 2023. (One British thermal unit [Btu] is defined as the amount of energy needed to raise the temperature of one pound of water by one degree Fahrenheit.)

Primary energy (the total amount of energy input) used to produce electricity peaked in 2007, at which point the energy consumed for electric power generation was greater than what the entire nation used for all other purposes in 1950 (Figure 1).

Despite the increasing reliance on electricity, many federal and state policymakers believe that mandates are needed to more rapidly electrify nearly everything. For example, many states have adopted California’s “Advanced Clean Car” rules, which mandate that by 2035, all new cars and light trucks sold be electric. At the same time, numerous states have enacted legislation to mandate how electric utilities produce electricity in an attempt to ensure the use of sources that entail zero carbon dioxide emissions; some of these mandates will take effect as soon as 2030.[3]Clean Energy States Alliance, Table of 100% Clean Energy States, undated.

At the federal level, the U.S. Environmental Protection Agency has adopted mileage standards and emissions limits that, by 2032, will force more than half the new vehicles sold in the U.S. to be electric.[4]Ivette Rivera and Michael Harrington, “EPA’s De Facto Electric Vehicle Mandate: Too Far, Too Fast,” NADA (National Automobile Dealers Association), Sept. 30, 2024. There are also ongoing efforts at the state and federal levels to encourage or require homeowners and businesses to switch out fossil-fuel space- and water-heating systems and replace them with electric heat pumps. Some states, including New York and Washington, require all new residential and commercial buildings to be fully electric.[5]Rachel Ramirez and Ella Nielsen, “New York Becomes the First State to Ban Natural Gas Stoves and Furnaces in Most New Buildings,” CNN, May 3, 2023. Washington State’s building code does not explicitly ban gas appliances but imposes requirements that make it uneconomic to install them. See Isabella Breda, “WA Adopts New Rules to Phase Out Fossil Fuels in New Construction,” Seattle Times, Nov. 29, 2023.

Some cities also have enacted laws, such as New York City’s Local Law 97, that require residential multifamily buildings to replace fossil-fuel heating systems with electric heat pumps.[6]Local Laws of the City of New York for the Year 2019, No. 97.

There are also efforts to convert energy-intensive industrial processes to electricity. For example, although steel can be recycled using electric-arc furnaces, manufacturing raw steel has always required a blast furnace that uses coking coal (metallurgical coal) to manufacture iron. Yet some policymakers wish to force steelmakers to use hydrogen, manufactured via electrolysis,[7]Electrolysis means, in effect, running an electric current through water to break down water molecules into hydrogen and oxygen. When the electricity required is produced from zero-emissions electric generating resources such as wind and solar power, the resulting hydrogen is deemed “green hydrogen.” See Jonathan Lesser, “Green Hydrogen: A Multibillion-Dollar Energy Boondoggle,” Manhattan Institute, Feb. 1, 2024. as a “green” substitute for coke.[8]World Steel Association, “Fact Sheet: Hydrogen (H2)-Based Ironmaking,” undated. Coke is produced through a process called “pyrolysis,” which involves heating coal at high temperatures for long periods of time in the absence of air to produce a fuel that is almost pure carbon. There is also a push to manufacture cement, which is also energy-intensive, using electricity instead of fossil fuels.[9]Casey Crownhart, “How Electricity Could Help Tackle a Surprising Climate Villain,” MIT Technology Review, Jan. 3, 2024.

The stated impetus for these electrification mandates is to reduce carbon dioxide emissions. Regardless of the merits and controversies around that goal (a subject outside the scope of this report), the electrification mandates will require additional electricity supplies and, importantly, new infrastructure to handle the additional demand, especially to ensure that electricity is delivered when consumers want or need it. Because of the enormous scale of U.S. electricity systems and the long planning horizons needed to expand those infrastructures, as well as the long time the systems are expected to operate, for decades utilities have engaged in long-term planning to ensure, as a fundamental goal, that there is enough capability to meet the demands that will arise in the future based on best guesses of future economically driven needs.

However, instead of planning to ensure sufficient generation, transmission, and distribution infrastructure to meet future, higher demands, many utilities, often at the behest of state lawmakers and regulators, are now far more focused on developing policies to “manage” electricity demand to reduce the need for new investment. In addition, it has become more common for electric utilities to implore, induce, or force customers to limit their electricity consumption when demand is greatest or whenever it exceeds available supplies. This occurs most often when weather conditions are severe,[10]For example, on Aug. 19, 2024, Xcel Energy issued an urgent appeal for its customers in eastern New Mexico, the Texas Panhandle, and the Texas south plains to reduce electricity consumption. See Angel Oliva, “Xcel Energy Declares Energy Alert, Asks Customers to Conserve Energy,” Myhighplains.com, Aug. 19, 2024. but it also occurs because some planners have ignored the realities of electricity supply and demand.

The rationale behind these “load management” or “demand side management” policies is to reduce costs. It is often true that devising ways to reduce customers’ consumption of electricity, especially during times of peak demand, reduces direct costs when compared with the costs of investing in new infrastructure. Doing so, however, ignores the direct and indirect costs imposed on electricity consumers. These include direct monetary costs, such as charging much higher prices during peak hours, or physically restricting access to electricity and forcing changes in personal or business behavior. There are also indirect costs, in the form of shifting business operations to nonoptimal times or reducing convenience and comfort for consumers.

Mandating more electrification inherently conflicts with simultaneously limiting new supplies as well as consumer choices. It also runs the risk of not only stifling growth but also violating a long-standing goal of providing society with the electricity it needs. As this report discusses, the solution includes fully incorporating consumer costs into utility planning efforts and de-emphasizing mandatory electrification efforts, which will have no measurable impacts on global climate.[11]The impact of reduced greenhouse gas emissions can be estimated using the “Model for the Assessment of Greenhouse Gas Induced Climate Change” (MAGICC), which is sponsored by the U.S. EPA. A recent study (Richard Lindzen, William Happer, and William A. van Wijngaarden, “Net Zero Avoided Temperature Increase,” June 11, 2024) estimated that the impact of the U.S. achieving net zero emissions by 2050 would be less than 0.01°C.

A Brief History of Electric Utility Planning

After World War II ended, electric utility planning was straightforward: the demand for electricity increased at a steady rate each year, and utilities built large, central-station plants, typically coal-fired, to meet that demand. Utilities also built transmission and distribution systems to handle the growth in electricity consumption, ensuring that there was sufficient capacity (generation, transmission, and distribution) to meet their customers’ needs when demand was greatest. Ensuring that regulated utilities built enough capacity to keep the lights on as the economy and customer demand grew was one side of an unwritten regulatory compact: utilities were required to meet customer demand and, in exchange, would be allowed to earn a regulated return on their investments.

Then things changed. In the 1960s, electric utilities began an ambitious program to build nuclear power plants, envisioned as clean, low-cost generating resources. However, the lack of a standard design and changing regulations, combined with massive, integrated antinuclear campaigns in the media and the courts, caused construction costs to soar. Then, in 1973, the first OPEC oil embargo struck. Oil prices soared and the U.S. economy shrank, which caused the demand for electricity to fall. The second oil shock, in 1979, further crippled the U.S. economy and caused electricity demand to decrease again. The only previous event that caused a decrease in electricity demand was the Great Depression.[12]Historical Statistics of the United States, Series S 32–43.

Suddenly, utilities found themselves building costly new nuclear plants that were not needed. This led to a wave of project cancellations and soaring electric rates as utilities passed many of the costs of these canceled projects on to their customers.

The environmental movement also came to the fore during the 1970s. The 1970 Clean Air Act Amendments imposed, for the first time, stringent emissions standards on fossil-fuel plants, which were almost entirely coal- and oil-fired. The economic havoc caused by the first OPEC oil embargo led to demands to overhaul the entire energy industry. The result was comprehensive energy legislation, called the National Energy Act, which consisted of five major statutes and was signed into law by President Carter in 1978.[13]H.R. 8444—A Bill to Establish a Comprehensive Energy Policy, 95th Congress (1977–78). One of those statutes was the Public Utility Regulatory Policies Act (PURPA), which required electric utilities to purchase electricity generated from “alternative” sources (at first mostly wind and small hydroelectric plants) developed by nonutility entities.[14]Public Utility Regulatory Policies Act (PURPA), Public Law 95-617, Nov. 9, 1978.

Regulators set the prices at the utilities’ “avoided costs,” that is, what the regulators determined would be the cost of new generation developed by utilities.

At the same time, and especially in response to the soaring costs of nuclear power plants, environmentalists demanded that utilities focus on conserving electricity to reduce the need for building new plants. They argued that doing so would reduce pollution and be less costly than developing new generating resources. Environmentalists also argued that energy conservation programs would avoid or delay the need to build more transmission lines, install larger substations, and rebuild local distribution lines (the poles and wires that run down streets) to handle the greater demand. Ironically, given today’s efforts in many states to force consumers to use electric heat pumps rather than natural gas equipment for space and water heating, in the early 1980s environmentalists insisted that electric utilities subsidize customers who switched to natural gas for space and water heating.

The new approach to utility planning, with its primary emphasis on conservation and efficiency, became known as “least-cost planning” and, more recently, as “integrated resource planning” (IRP). The initial idea behind requiring utilities to develop detailed IRPs was to ensure that the costs and benefits of generating resources and energy-efficiency measures would be compared on an equal footing. Energy-efficiency advocates argued, and still argue today, that reducing electricity consumption through conservation and improved energy efficiency (producing what they term “negawatts”) is often preferable to adding generating capacity to meet increasing demand, especially when environmental costs are considered. Frequently, however, energy-efficiency measures cost more than advertised, save less energy than advertised, and reduce the quality of the services provided to consumers.[15]Paul L. Joskow and Donald B. Marron, “What Does a Negawatt Really Cost? Evidence from Utility Conservation Programs,” Energy Journal 13, no. 4 (October 1992): 41–74; “Briefing: The Elusive Negawatt,” The Economist, May 8, 2008.

Compact fluorescent bulbs, for example, were one of the earliest large-scale energy-efficiency measures that utilities were required to subsidize, but many consumers complained that their light quality was inferior to that of incandescent bulbs. Similarly, U.S. government energy- and water-efficiency standards for clothes washers and dishwashers have elicited consumer complaints about poor performance.

The focus on improved energy efficiency has continued ever since, with the additional requirement that utilities acquire increasing quantities of supplies from renewable resources, especially wind and solar power.[16]Energy Journal 13, no. 4 (October 1992): 41–74; “Briefing: The Elusive Negawatt,” The Economist, May 8, 2008. Some states, such as New Jersey, have enacted laws requiring electric (and gas) utilities to reduce peak demand by a certain percentage annually.[17]New Jersey Clean Energy Act, P.L. 2018, c. 17, May 23, 2018.

Moreover, IRPs often incorporate estimates of the environmental benefits of reduced greenhouse gas emissions using the “social cost of carbon” (SCC), that is, the estimated benefit of reducing emissions of carbon dioxide and other greenhouse gases. These benefits tip the scales further toward energy-efficiency programs and wind and solar generation.[18]A discussion of the appropriateness of using “social cost of carbon” (SCC) values in cost-benefit analyses of energy conservation programs is beyond the scope of this report. Since the 1979 accident at the Three Mile Island nuclear plant, new nuclear plants have not been considered because of past cost overruns, concerns about nuclear waste disposal, and fears of other accidents. Apart from the Tennessee Valley Authority’s long-delayed Watts Bar Unit 2, which entered service in 2016, the only new nuclear units to be placed in service in the last 30 years are Vogtle Units 3 and 4, owned by Southern Company, which experienced long construction delays and cost overruns. Unit 3 began commercial operation on July 31, 2023, and Unit 4 began operation on Apr. 29, 2024. Ironically, in September 2024, Microsoft Corporation announced an agreement with Constellation Energy to restart the 835 MW Three Mile Island Unit 1 nuclear plant, which shut down in 2019. The plant is expected to be in operation by 2028.

The Growing Reliance on “Managing” Electricity Consumption

Although improved energy efficiency was the initial focus of “negawatt” advocates, a new utility planning paradigm has evolved: using prices, direct controls, and exhortation to reduce electricity demand. Not surprisingly, the effects on consumers are unwelcome.

Improved energy efficiency means using less energy to obtain the same services (heat, hot water, lighting, etc.). This is a “better mousetrap” outcome, which benefits everyone (except mice) as long as the cost of acquiring the additional energy efficiency is less than the expected reduction in energy costs. Energy management focuses on reducing consumption and shifting it to times when overall demand is lower. A residential customer installing a more efficient water heater is an example of the former, whereas that same consumer doing the laundry in the middle of the night instead of the early evening, or lowering the thermostat in winter, is an example of the latter[19]In a Feb. 2, 1977, speech, President Carter advised Americans to lower their thermostats and wear sweaters. (see box, “Estimating the Cost of Energy-Efficiency Measures: Theory and Reality”).

Despite the detailed planning and emphasis on energy conservation and efficiency to ensure a least-cost future, electricity costs continue to increase.

First, energy-efficiency measures almost always save less energy than engineering estimates calculate. One reason is that measures often wear out sooner than expected because the hardware is less reliable than assumed; LED bulbs, for example, can fail much sooner than advertised.

Estimating the Cost of Energy-Efficiency Measures: Theory and Reality

The costs of alternative electricity resources, including efficiency resources, are often compared using what is called the “levelized cost of energy” (LCOE). In effect, LCOE estimates are similar to how a mortgage is calculated. The costs of a new power plant are added up by a potential investor (the initial investment cost, plus the cost of future operations and maintenance, etc.) and discounted to the present day. Next, the assumed energy production (or savings) is determined over the life of the resource and also discounted to the present day. The present value cost divided by the present value production (or savings for energy-efficiency measures) equals the LCOE.
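Written as a formula, the calculation just described looks like the following. The notation is introduced here for illustration and is not the report's: C_t is the cost incurred in year t (the initial investment falls in year 0), E_t is the energy produced, or for an efficiency measure the energy saved, in year t, r is the discount rate, and T is the assumed life of the resource.

```latex
\mathrm{LCOE} \;=\; \frac{\displaystyle\sum_{t=0}^{T} \frac{C_t}{(1+r)^{t}}}{\displaystyle\sum_{t=0}^{T} \frac{E_t}{(1+r)^{t}}}
```

The numerator is the present value cost and the denominator the present value production (or savings); their ratio is the levelized cost per kWh.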

As with many things, the devil is in the details. Because the actual savings from energy-efficiency measures typically cannot be measured directly, LCOE estimates for energy-efficiency measures are usually based on engineering estimates of savings. For example, adding insulation to a home’s walls will reduce the rate at which heat escapes or, in summer, enters. The reduction in energy use will depend on how much insulation is added, the type of insulation (e.g., batts, Styrofoam, blown-in cellulose), the number of windows and doors, the rate of deterioration of the insulation (e.g., settling of cellulose insulation), and, importantly, the behavior of the occupants, who may decide to increase their comfort levels.

The uncertainties in these calculations are numerous. The lifetimes of energy-efficiency measures (more efficient lights and appliances, more efficient motors, etc.) can vary tremendously: a new LED lightbulb may be advertised as having a 50,000-hour life but can still burn out after 500 hours or be operating after 100,000. The price of electricity over the life of the energy-efficiency measure is uncertain. Most important for measures with multiyear expected lifetimes is the discount rate used to convert future costs and benefits to present-day ones. Frequently, energy-efficiency proponents use unrealistically low “societal” discount rates to convert future costs and benefits, rather than use consumers’ or businesses’ own discount rates, which tend to be much higher. (For example, an investment that will break even after 25 years may be of little value to someone who is 80 years old.) Because most of the costs are the initial investment, a lower discount rate will increase the present value benefits, thus reducing the overall LCOE.
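The effect of the discount rate can be seen in a small calculation. The sketch below uses an entirely hypothetical measure ($100 up front, no ongoing costs, 100 kWh saved per year for 15 years) and compares a low 3% "societal" rate with a higher 10% rate of the kind a household might apply; neither rate comes from the report.

```python
# Sensitivity of an energy-efficiency measure's LCOE to the discount rate.
# ASSUMPTIONS (hypothetical, for illustration only): $100 up-front cost,
# no ongoing costs, 100 kWh saved per year for 15 years.

def present_value(flows, rate):
    """Discount a list of annual flows; flows[0] occurs today (year 0)."""
    return sum(f / (1.0 + rate) ** t for t, f in enumerate(flows))

def lcoe(costs, savings_kwh, rate):
    """Present value of costs divided by present value of kWh saved ($/kWh)."""
    return present_value(costs, rate) / present_value(savings_kwh, rate)

LIFE_YEARS = 15
costs = [100.0] + [0.0] * LIFE_YEARS      # all cost is incurred up front
savings = [0.0] + [100.0] * LIFE_YEARS    # savings begin in year 1

for rate in (0.03, 0.10):  # low "societal" rate vs. a higher private rate
    print(f"discount rate {rate:.0%}: LCOE = ${lcoe(costs, savings, rate):.4f}/kWh")
```

With these inputs the measure appears to cost roughly 8.4 cents per kWh saved at 3% but about 13.1 cents at 10%, because discounting shrinks the future savings while leaving the up-front cost untouched. Shortening LIFE_YEARS, as when bulbs fail early, raises the LCOE in the same way.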

Because many energy-efficiency measures are subsidized, either by local utilities or through tax credits, the true costs are often replaced with the subsidized cost estimates—in effect, assuming the subsidies are “free” money. This leads to inefficient allocation of capital: more spent on investments with lower returns and less spent on investments with higher returns.

Many cost estimates also ignore the direct and indirect costs to consumers. The purchase price of a new furnace, for example, may be subsidized, but the cost to hire a contractor to install it generally is not. A new, energy-efficient dishwasher might cost less to operate per load, but might wash dishes poorly.

Second is a phenomenon known as the “Jevons Paradox,” named after the 19th-century economist William Stanley Jevons. Improving the efficiency of equipment, such as an air conditioner or furnace, lowers the cost of the service it provides, which might be called “indoor comfort.” As Jevons noted, when the cost of providing a good or service falls, consumption increases. Hence, consumers set the thermostat lower in the summer and higher in the winter. This phenomenon is known as the “rebound effect.”[20]One recent study of cities in China found that the rebound effect amounted to almost half the energy savings for residential consumers. See Xiaoling Ouyang et al., “How Does Residential Electricity Consumption Respond to Electricity Efficiency Improvement? Evidence from 287 Prefecture-Level Cities in China,” Energy Policy 171 (December 2022): 113302. A recent study of electricity consumption in European countries estimated that, over the long run, the rebound effect averaged 43% of estimated savings. See Camille Massie and Fateh Belaid, “Estimating the Direct Rebound Effect for Residential Electricity Use in Seventeen European Countries: Short and Long-Run Perspectives,” Energy Economics 134 (June 2024): 107571. (A similar impact has been observed with residential customers who install solar panels on their homes.)[21]Matthew E. Oliver, “Tipping the Scale: Why Utility-Scale Solar Avoids a Solar Rebound and What It Means for U.S. Solar Policy,” Electricity Journal 36, no. 4 (May 2023): 107266. When the cost of obtaining energy services falls, more services are consumed. And retiring existing, depreciated fossil-fuel generating resources and replacing them with new solar and wind generation raises electric rates, owing to how those rates are calculated (see Appendix, “How Retail Electric Utility Rates Are Set”).
Moreover, in addition to any subsidies that electric ratepayers must pay, wind and solar generation, because of its intermittency, requires additional backup supplies, along with electric storage, to compensate for periods when no wind or solar electricity is generated.
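The rebound effect described above can be expressed as a simple adjustment to engineering estimates: net savings equal estimated savings times one minus the rebound fraction. The sketch below applies the 43% long-run European average cited above to a hypothetical measure with 1,000 kWh per year of engineering-estimated savings; the measure and its savings figure are assumptions for illustration.

```python
# How a rebound effect erodes engineering estimates of energy savings.
# ASSUMPTION: a hypothetical measure with 1,000 kWh/yr of estimated savings.
# REBOUND = 0.43 is the long-run European average cited in the text.

def net_savings(engineering_kwh: float, rebound: float) -> float:
    """Savings left after consumers 'take back' part of them as extra use."""
    if not 0.0 <= rebound <= 1.0:
        raise ValueError("rebound must be a fraction between 0 and 1")
    return engineering_kwh * (1.0 - rebound)

def cost_multiplier(rebound: float) -> float:
    """Factor by which the true cost per kWh saved exceeds the engineering estimate."""
    if not 0.0 <= rebound < 1.0:
        raise ValueError("rebound must be at least 0 and below 1")
    return 1.0 / (1.0 - rebound)

ENGINEERING_KWH = 1_000.0
REBOUND = 0.43

print(f"net savings: {net_savings(ENGINEERING_KWH, REBOUND):.0f} kWh/yr")
print(f"cost per kWh saved: {cost_multiplier(REBOUND):.2f}x the engineering estimate")
```

In this sketch a 43% rebound leaves 570 kWh of actual savings, so every dollar spent buys about 75% more cost per kWh saved than the engineering estimate suggests. Rebounds above 100% (so-called backfire) are deliberately excluded.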

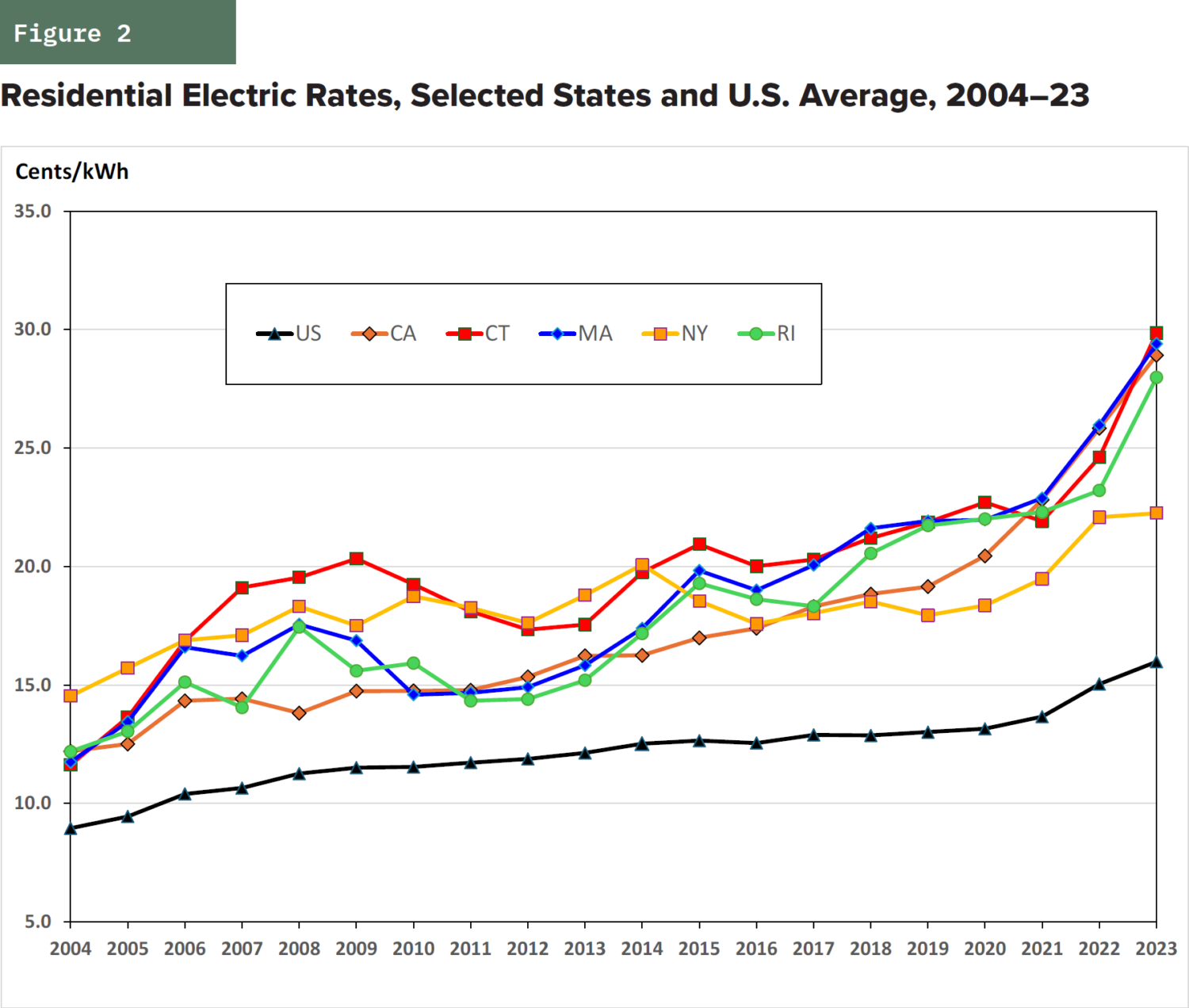

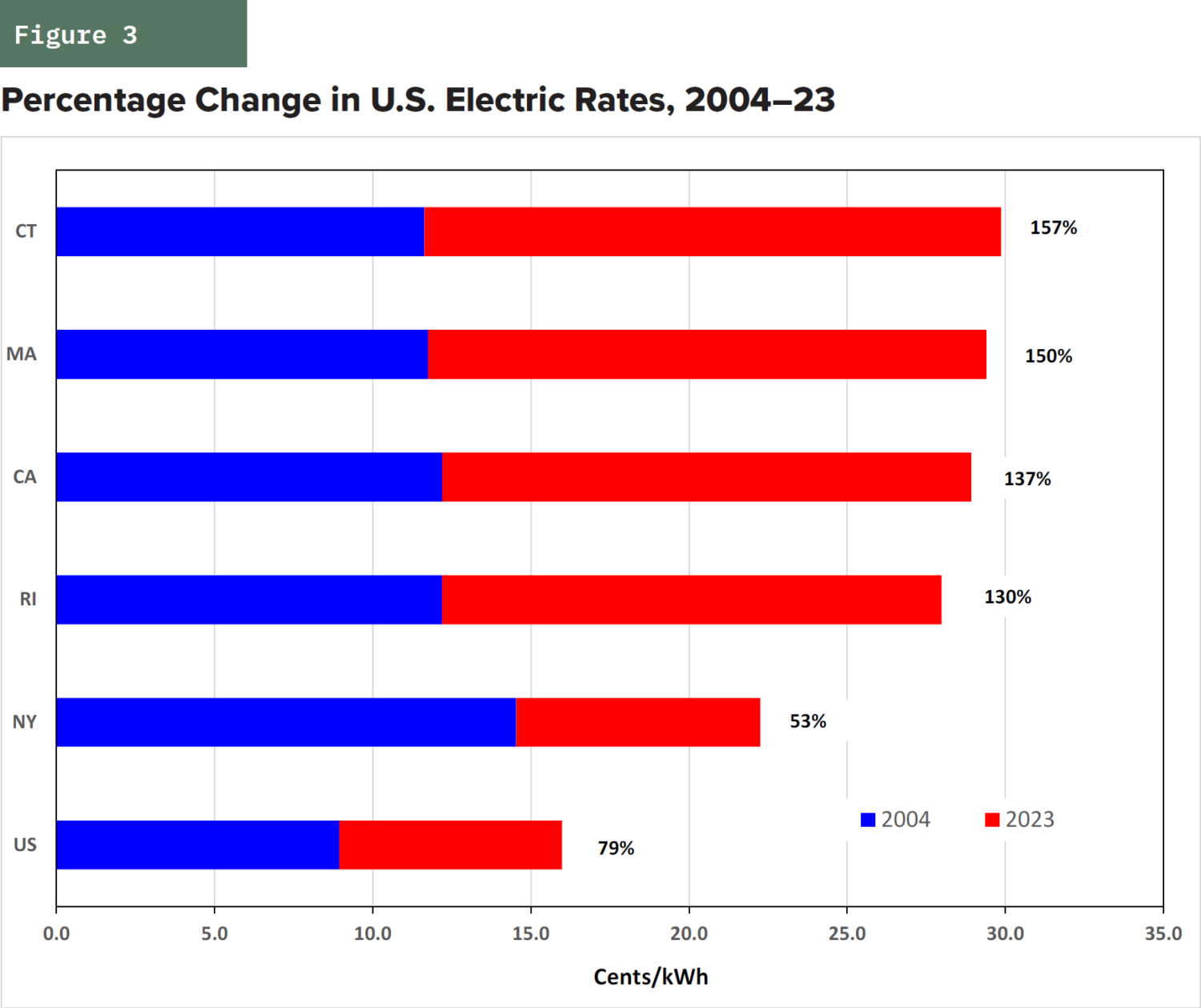

Over the 20-year period 2004–23, electric rates, especially for residential customers, have soared in many states, particularly since 2020 (Figure 2). Moreover, the disparity in electric rates among states has widened over time. The highest rates, averaging just under 30 cents per kWh in 2023, and the largest percentage increases have been in states that have zero-emissions electricity mandates (Figure 3).

As traditional generating resources have been retired to meet state and federal environmental mandates, planners have begun to rely on the second type of conservation: curtailing consumption to reduce peak demand and shifting consumption to hours when demand has been traditionally low, such as late at night. This has been accomplished in various ways: by surge pricing (i.e., raising rates when demand is high and supply is low—a common practice of airlines and hotels); direct utility control of customers’ electric equipment; and monetary incentives to allow utilities to shut off the electricity to certain customers when required.

The most common approach to managing demand, called “time-of-use” (TOU) pricing, adjusts prices by time of day, charging consumers much more for using electricity when demand typically peaks (in the early morning and early evening hours) and less at off-peak times (see box, “Alternative Forms of TOU Pricing”). TOU pricing is a form of surge pricing. The prices charged can be set by regulators (e.g., setting rates during administratively defined peak load hours to discourage consumption) or in real time, based on wholesale market prices. In either case, higher prices provide an incentive, albeit a negative one, for consumers to shift their electricity consumption to hours when overall electricity demand is lower, such as running the dishwasher and doing the laundry at midnight instead of the early evening.

Although many economists consider TOU pricing to improve economic efficiency by ensuring that consumers receive appropriate price signals, it imposes hardship on consumers. This is especially the case with real-time pricing because consumers have no way of knowing those prices in advance.

Alternative Forms of TOU Pricing

Three forms of TOU pricing for regulated utilities have been developed. Before real-time meters became available, some regulators allowed utilities to impose rates that differed by season of the year, depending on when demand was greatest. For summer-peaking utilities, summer rates were set higher than winter rates, and vice versa for winter-peaking utilities. The seasonal difference in rates was designed to reflect the different costs of the generating resources (coal, natural gas, oil, etc.) that were needed to meet demand, with the rates charged to customers reflecting the higher costs of generators used only in hours when demand was greatest.

With the advent of meters that could record customers’ consumption at different times, TOU pricing could reflect seasonal differences in demand and differences by hour, similar to how phone companies used to set rates for long-distance calls. For example, utilities could develop one set of rates for peak hours (e.g., weekdays, 7 a.m.–11 p.m.) and another, lower-cost set of rates for off-peak hours (weekends and 11 p.m.–7 a.m.).

Today, TOU pricing can be either of these, but advanced metering allows utilities to impose real-time TOU pricing, in which prices charged to consumers reflect real-time wholesale market prices. Real-time TOU pricing is considered by some economists to be ideal from the standpoint of economic efficiency because the prices reflect the true marginal (or incremental) cost of electricity consumption at all times. However, those prices aren’t known until after the fact.

In organized wholesale markets, such as PJM Interconnection (which coordinates generating plants and a wholesale market across 13 states and the District of Columbia, from the mid-Atlantic west to parts of Illinois), market-clearing prices (called “real-time” prices) are determined based on actual demand and the generators used to meet that demand. Because this is a complex process, electricity consumers do not know the actual prices that they will be charged until they receive their next bill. Thus, consumers cannot know the real-time price in real time.

Most consumers have reacted unfavorably to TOU pricing for two reasons. First, many have seen their electricity bills soar. For example, Southern California Edison customers complained of monthly bills upward of $1,000.[22]Peter Wilgoren, “Southern Californians Hit with Skyrocketing Electricity Bills,” KTLA 5, Aug. 16, 2024. Even for California, which has some of the highest electricity rates in the country, bills have been jarring, and many lower-income consumers cannot pay them. (Subsidized rates for lower-income consumers are paid for with higher rates for everyone else.) Last summer, for example, residential customers of Southern California Edison faced peak TOU rates as high as 75 cents/kWh.[23]Southern California Edison, Residential Tariff SCE-TOU-D-5. These rates are prohibitively expensive for lower-income customers, especially those who live inland where summer temperatures often exceed 100 degrees.

Similarly, San Diego Gas & Electric most recently charged residential customers 56.1 cents/kWh between the hours of 4 p.m. and 9 p.m. during the summer months.[24]San Diego Gas & Electric, Time of Use Plans. Moreover, on “Reduce Your Use Event” days, which the company can declare 18 times per year, it charges residential customers $1.16/kWh during these same hours. Running a typical home air conditioner, which draws three kilowatts, would cost a residential customer over $17 for those five hours.
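The air-conditioner figure is simple energy-times-price arithmetic. The sketch below uses the SDG&E rates quoted above and assumes the unit runs continuously at 3 kW for the entire 4 p.m. to 9 p.m. window; real units cycle on and off, so actual costs would vary.

```python
# Peak-window cost of running an air conditioner under the SDG&E rates cited above.
AC_DRAW_KW = 3.0   # typical home air conditioner, per the text
PEAK_HOURS = 5     # 4 p.m. to 9 p.m.
RATES_PER_KWH = {
    "summer on-peak": 0.561,             # $/kWh
    "Reduce Your Use Event day": 1.16,   # $/kWh
}


def peak_window_cost(draw_kw: float, hours: float, rate_per_kwh: float) -> float:
    """Energy used (kW x hours = kWh) multiplied by the per-kWh price."""
    return draw_kw * hours * rate_per_kwh


for label, rate in RATES_PER_KWH.items():
    cost = peak_window_cost(AC_DRAW_KW, PEAK_HOURS, rate)
    print(f"{label}: ${cost:.2f} for 15 kWh")
```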

Second, and the focus of this report, TOU pricing increases customer inconvenience and can decrease physical well-being. Consumers in California’s Central Valley who cannot afford to turn the air conditioner on during the day when summer temperatures typically exceed 100 degrees suffer physically.

Another approach to reducing electricity demand is direct load control (DLC), in which a customer’s local electric utility can remotely shut off appliances such as air conditioners and water heaters to limit peak consumption. DLC has also been proposed to limit the additional electricity demand from charging electric vehicles, which, if mandates for EVs to account for increasing shares of new vehicle sales remain in force, will require large investments in supporting infrastructure.[25]Jonathan Lesser, “Infrastructure Requirements for the Mass Adoption of Electric Vehicles,” National Center for Energy Analytics, June 2024.

Numerous academic studies have proposed that EV charging should be “managed,” either by limiting the amount of power that can be drawn by a charger or preventing a charger from operating in certain hours, to reduce peak demand.[26]Elaine Hale et al., “Electric Vehicle Managed Charging: Forward-Looking Estimates of Bulk Power System Value,” National Renewable Energy Laboratory, NREL/TP-6A40-83404, September 2022. For example, Allego, the Dutch EV charging station operator, has imposed a “blocking fee” on EVs that are charged for more than 45 minutes in order to “ensure a fairer distribution,” according to the company, “of the charging infrastructure.”[27]Pierre Gosselin, “Electricity Rationing at Charging Stations Due to Limited Charging Infrastructure in Europe,” NoTricksZone, July 14, 2024.

Many electric utilities have instituted load-control programs, such as lockouts on air-conditioning units in summer when electricity demand peaks.[28]Ahmad Faruqui, “Direct Load Control of Residential Air Conditioners in Texas,” Public Utility Commission of Texas, Oct. 25, 2012. Load controls have also been applied to electric water heaters.[29]Herman Trabish, “Utilities in Hot Water: Realizing the Benefits of Grid-Integrated Water Heaters,” Utility Dive, June 20, 2017. The justifications for these programs are that they can reduce greenhouse gas emissions[30]See, e.g., Peter Alston and Mary Ann Piette, “Electric Load Flexibility for the Clean Energy Transition,” Notre Dame Journal on Emerging Technologies (NDJET) 1, no. 1 (2020): 92–149; U.S. Department of Energy, “Climate Change and the Electricity Sector: Guide for Climate Change Resilience Planning,” September 2016. and save the power grid.[31]Jon Reed, “The US Power Grid Has a Problem. Your House Could Help Solve It,” CNET, Aug. 18, 2024.

Unsurprisingly, consumers are wary of DLC. They don’t like the idea that the local utility can control their power consumption and determine when they can turn on their air conditioner, dry their clothes, or charge their EV. Consumers are also concerned about their privacy, fearing that DLC will enable their local utility effectively to “spy” on them.[32]Roy Dong et al., “Quantifying the Utility-Privacy Tradeoff in Direct Load Control Programs,” arXiv, May 26, 2015; Karen Stenner et al., “Willingness to Participate in Direct Load Control: The Role of Consumer Distrust,” Applied Energy 189 (March 2017): 76–88.

Still another mechanism for managing electricity use, used primarily for industrial and large commercial customers, is interruptible rates—offering a customer a lower rate in exchange for agreeing to allow the utility to shut off the customer’s electricity when overall demand exceeds a certain level. Some utilities—notably, California’s investor-owned utilities—have resorted to large-scale power shutoffs, called Public Safety Power Shutoffs, to reduce the likelihood of wildfires caused by electrical equipment failures.

The Paradox: Mandating Increased Reliance on Electricity but Limiting Access to It

An increasing number of state and federal policies mandate the electrification of virtually all end uses to reduce carbon emissions from fossil fuels. For example, 18 states have adopted California’s Advanced Clean Car II rules requiring increasing percentages of new vehicle sales to be EVs, reaching 100% for the 2035 model year. In 2019, New York City enacted Local Law 97, requiring all residential buildings larger than 25,000 square feet to convert to electricity by 2035.[6]Local Laws of the City of New York for the Year 2019, No. 97. Other states, such as New Jersey, seek to convert all residential heating to electricity.[34]2019 New Jersey Energy Master Plan, 157–72.

Together, mandates for EVs and the electrification of space and water heat will likely double electricity consumption and peak demand. Coupled with policies that mandate supplying the nation’s electricity with zero-emissions resources (notably, intermittent wind and solar power), not only will electricity prices continue to increase but the ability to meet consumers’ increased demand will become more problematic.[35]See, e.g., New York Independent System Operator (NYISO), 2024 Power Trends: The New York ISO Annual Grid and Markets Report.

The OPEC oil embargoes clearly demonstrated that energy availability and cost are key drivers of economic growth. In the decades since, electricity has taken on greater importance in the U.S. economy. For example, a recent report by Goldman Sachs forecasts that electricity consumption for data centers and artificial intelligence will increase from about 150 TWh in 2023 to about 400 TWh in 2030.[36]Goldman Sachs, “AI Is Poised to Drive 160% Increase in Data Center Power Demand,” May 14, 2024. (By comparison, total U.S. electricity sales in 2023 were 3,874 TWh.)[37]EIA, Electricity Data Browser. Although growth in data centers and AI has been a recent focus of the press, electricity demand growth in the other sectors of the U.S. economy (residential, commercial, industrial, and transportation) will likely be even larger.[38]Robert Walton, “US Electricity Load Growth Forecast Jumps 81% Led by Data Centers, Industry: Grid Strategies,” Utility Dive, Dec. 13, 2023.
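To put the Goldman Sachs forecast in perspective, a short calculation with the rounded figures above (about 150 TWh and about 400 TWh) gives the implied compound annual growth rate and the data center share relative to 2023 U.S. sales. Because the inputs are rounded, the implied total increase (roughly 167%) differs slightly from the headline figure of 160%; the 2030 share here is measured against 2023 sales, not against a forecast of 2030 sales.

```python
# Data center forecast (rounded, per the text) compared with total 2023 U.S. sales.
DC_2023_TWH = 150.0
DC_2030_TWH = 400.0
US_SALES_2023_TWH = 3874.0
YEARS = 2030 - 2023

growth = DC_2030_TWH / DC_2023_TWH - 1                  # total fractional increase
cagr = (DC_2030_TWH / DC_2023_TWH) ** (1 / YEARS) - 1   # implied compound annual rate
share_2023 = DC_2023_TWH / US_SALES_2023_TWH
share_2030 = DC_2030_TWH / US_SALES_2023_TWH            # relative to 2023 sales level

print(f"Total increase: {growth:.0%}")
print(f"Implied annual growth: {cagr:.1%}")
print(f"Share of 2023 sales: {share_2023:.1%} -> {share_2030:.1%}")
```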

This is why an increasingly crucial issue is the availability of sufficient electricity to meet peak demand, termed “reliability.” There are different ways to measure the reliability of an electric system, but for most of us, it comes down to whether electricity is available whenever we want to use it. Among the nation’s electric grid operators, such as the Midcontinent Independent System Operator (MISO), PJM Interconnection (PJM), and the New York Independent System Operator (NYISO), the twin policies of forcing greater electrification and requiring that electricity demand be met primarily with intermittent wind and solar generation are creating concerns that reliability will suffer, leading to more frequent blackouts.[39]Paul Hibbard, Joe Cavicchi, and Grace Howland, “Fuel and Energy Security Study Results and Observations,” NYISO, Sept. 26, 2023; Midcontinent Independent System Operator, “MISO’s Response to the Reliability Imperative,” February 2024; and PJM Interconnection, “Energy Transition in PJM: Resource Retirements, Replacements & Risks,” Feb. 24, 2023.

Higher-cost, less available electricity is incompatible with mandates for an all-electric future. The OPEC oil embargoes of the 1970s only too clearly demonstrated the link between energy prices and economic growth. By increasing the cost to produce most goods and services, the embargoes exacerbated inflation and caused the U.S. economy to fall into recession. The same relationship holds for electricity, especially as electricity becomes the “fuel” for more end uses: higher electric prices mean reduced economic growth, leading to a lower standard of living and greater hardship for consumers.

Rather than address this economic truism, policymakers wish to control costs by restricting access to the electricity that they insist consumers use. Some environmentalists go even further: they advocate “degrowth” policies to reduce energy consumption to combat climate change by lowering U.S. living standards.[40]Jason Hickel et al., “Degrowth Can Work—Here’s How Science Can Help,” Nature, Dec. 12, 2022; Jesse Stevens, “The Relentless Growth of Degrowth Economics,” Foreign Policy, Dec. 17, 2023.

Moreover, perhaps for political reasons, some grid operators have been unwilling to clarify the difficulties, preferring to gloss over them. For example, NYISO, in its 2024 Power Trends report, claims that “unprecedented levels of investment in Dispatchable Emission-Free Resources (DEFRs) will be necessary to reliably deliver sufficient energy to meet future demand.”[41]NYISO, 2024 Power Trends, 26. (The most commonly envisaged DEFRs are turbine generators that burn green hydrogen instead of natural gas or fuel oil.)[42]For additional discussion, see Lesser, “Green Hydrogen.”

The NYISO report estimates that, to maintain reliability, between 26,000 and 29,000 megawatts (MW) of DEFRs will be needed by 2030 (by comparison, a typical large nuclear generator is about 1,000 MW). The report adds, as an aside, that these are “not yet available on a commercial scale.”[43]Ibid., 5. In fact, hydrogen-burning generators do not even exist.

Economic immiseration is not a policy the public will willingly embrace, as recent European experience shows. Assuming that a nonexistent generating technology will be invented, commercialized, and deployed in the next few years in order to ensure a reliable electric system is magical thinking, if not delusional. Nevertheless, many politicians and policymakers seem oblivious to these physical realities. The result will be greater consumer inconvenience, higher costs, lower economic growth, and greater economic hardship. While some may consider such an outcome to be a feature and not a bug, presumably most Americans will not. Electricity consumption can be managed, or even reduced, by restricting access to electricity when customers want it or by making that access prohibitively costly. In either case, the economic costs of doing so are real and should be recognized. Hence, a least-cost future should account for the costs of electricity resources themselves and the indirect costs to consumers and businesses caused by inadequate electric infrastructure.

A final consideration in the push for electrification is the cost of abandoning useful fossil-fuel infrastructure. For example, EV mandates will require developing a charging infrastructure (including upgraded local distribution systems, additional transmission lines, and larger substations) that will cost trillions of dollars.[44]Lesser, “Infrastructure Requirements for the Mass Adoption of Electric Vehicles.” That infrastructure eventually will eliminate the economic value of today’s existing vehicle fueling infrastructure (e.g., refineries, storage tanks, delivery trucks). Similarly, mandates to electrify buildings will wipe out the value of the existing heat and hot water infrastructure. Forcing the abandonment of useful and valuable infrastructure is an additional cost that would be accounted for in any accurate cost-benefit analysis.

The Economic Costs of Insufficient Electric Infrastructure

The first, and still traditional, definition of insufficient electric infrastructure is the likelihood of blackouts that result from an inability to meet electricity demand at any given time. Even though electric system planners ensure that there is backup (called “reserve”) capacity available to meet demand in case of a sudden operating failure of a generating plant, there may be times when enough reserve capacity is unavailable or when an outage is caused by a different type of failure, such as the loss of a large transmission line that delivers electricity to a city. For example, in February 2021, Texas experienced a multiday blackout caused by severe winter storms that led to the shutdowns of numerous generators.[45]Ning Lin et al., “The Timeline and Events of the February 2021 Texas Electric Grid Blackouts,” University of Texas Energy Institute, July 2021. The second definition of insufficient electric infrastructure, which is the focus of this report, is based on the need to manage consumers’ electricity consumption to reduce the need for additional infrastructure investment while ensuring that a blackout does not occur.

New electric generating and high-voltage facilities take years—and, in some cases, decades—to permit and construct. Although permitting reform can speed up the process, meeting future load growth requires careful analysis to anticipate future needs. Given electrification policies imposed by individual states and the federal government, planning to meet the resulting increase in electricity demand ought to have been a basic requirement.

Yet it seems that planners have recently been caught off guard by new load growth, such as increased demand from data centers. In October 2023, for example, Southern Company subsidiary Georgia Power “discovered” that electricity demand would exceed supply by 2025, thanks to new manufacturing plants and data centers that have been encouraged to locate in Georgia.[46]Emily Jones and Gautama Mehta, “Why Mississippi Coal Is Powering Georgia’s Data Centers,” Grist.org, Aug. 27, 2024. Just one year after the company filed its IRP in 2022, it claimed that updated load growth projections were 17 times greater than the previous year’s projections[47]“Georgia Power Updates IRP, Seeking Additional Generation Resources,” Power Engineering, Oct. 27, 2023. and would require buying power, instituting more load-management programs, and building new capacity.

Inadequate infrastructure has direct and indirect costs. An inability to serve new loads, especially those of new commercial and industrial customers, means reduced economic growth. For example, rather than locating in a new region or expanding in an existing one that lacks adequate supply, manufacturers will often locate where electricity is available, and at a lower cost. And, as demand outstrips supply, prices increase, first in wholesale markets and then for retail customers served by local distribution utilities.

The recent experience in PJM, the grid operator that coordinates generation and transmission for a wholesale electric market that spans 13 states and the District of Columbia, is instructive. In a recent capacity market auction,[48]The PJM capacity market is designed to compensate generators for being available when needed. In effect, it pays for “iron in the ground.” The capacity of a generating resource is the maximum rate at which it can generate electricity, measured in megawatts (MW). For example, a nuclear power plant with a “nameplate” capacity (maximum output) of 1,000 MW can produce 1,000 MWh of electricity over a one-hour period: 1,000 MW x 1 hour = 1,000 MWh. market-clearing prices rose more than ninefold, from $28.92 per MW-day to $269.92 per MW-day.[49]Ethan Howland, “PJM Capacity Prices Hit Record Highs, Sending Build Signals to Generators,” Utility Dive, July 31, 2024. In several submarkets that face constraints on importing electricity, such as in parts of Maryland, the price hit the maximum allowable price of $466.35 per MW-day. Because of the higher capacity prices, retail consumers will likely pay an additional $14.7 billion over the 2025–26 delivery year (June 1–May 31).[50]“Following Capacity Price Spike, Mid-Atlantic States Call for PJM to Change Rules,” Power Engineering, Nov. 21, 2024. Although the higher prices are intended to signal the need for additional supplies, it will take years for new generators to be built. In the meantime, higher electricity prices will

The Traditional Measure of Electric Infrastructure Adequacy

Historically, utility planners have focused on the infrastructure needed to meet consumer demand at all hours by estimating the likelihood of an outage because of insufficient infrastructure. Although there are different ways to estimate such likelihoods, they are typically expressed in terms of the chance that an unplanned event (e.g., a generator that stops operating suddenly or a downed transmission line) makes it impossible to meet consumer demand. The probability of such a shortfall in a given period is called the “loss of load probability” (LOLP); the expected number of days (or hours) per year with a shortfall is called the “loss of load expectation” (LOLE). Electric system planners use complex models to determine LOLE and LOLP values.
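The models planners use are far more detailed, but the core idea behind LOLP and LOLE can be sketched in a few lines of Python. The fleet and load figures below are hypothetical, not any utility's data. The sketch enumerates every combination of generator outages (assuming each unit fails independently at its forced outage rate), computes the probability that available capacity falls below a given load (LOLP), and sums the daily-peak LOLPs over a year to get LOLE in days per year. A commonly cited planning target is an LOLE of 0.1 days per year, often described as “one day in ten years.” The toy fleet is deliberately tiny, so its LOLE lands far above that target; adding a unit shows how redundancy drives the figure down.

```python
from itertools import product

# Hypothetical generating fleet: (capacity in MW, forced outage rate).
UNITS = [(500, 0.05), (500, 0.05), (300, 0.08), (200, 0.10)]


def capacity_distribution(units):
    """Map each possible available capacity (MW) to its probability.

    Assumes units fail independently; enumerates all 2**n up/down combinations.
    """
    dist = {}
    for states in product((True, False), repeat=len(units)):
        prob, available = 1.0, 0
        for (capacity, outage_rate), is_up in zip(units, states):
            if is_up:
                available += capacity
                prob *= 1 - outage_rate
            else:
                prob *= outage_rate
        dist[available] = dist.get(available, 0.0) + prob
    return dist


def lolp(load_mw, dist):
    """Loss of load probability: chance that available capacity is below the load."""
    return sum(p for mw, p in dist.items() if mw < load_mw)


def lole_days(daily_peaks_mw, dist):
    """Loss of load expectation: expected number of days with a shortfall."""
    return sum(lolp(peak, dist) for peak in daily_peaks_mw)


dist = capacity_distribution(UNITS)
# Hypothetical year: 300 days peaking at 1,000 MW and 65 hot days at 1,300 MW.
daily_peaks = [1000] * 300 + [1300] * 65
print(f"LOLP at a 1,300 MW peak: {lolp(1300, dist):.4f}")
print(f"LOLE: {lole_days(daily_peaks, dist):.2f} days per year")

# Adding a hypothetical 400 MW unit (more redundancy) lowers LOLE.
bigger = capacity_distribution(UNITS + [(400, 0.05)])
print(f"LOLE with an extra 400 MW unit: {lole_days(daily_peaks, bigger):.2f} days per year")
```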

The more redundancy built into the system (e.g., surplus generating capacity on standby, extra transmission line capacity), the lower the LOLE and LOLP values. But that redundancy comes with higher costs. Hence, there is a trade-off between the value of ensuring adequate infrastructure and reliability for consumers and the costs of providing it. This means that there is an optimal level of reliability at which total costs (the cost of the infrastructure plus the expected cost of outages to consumers) are lowest.[51]For a discussion, see Will Gorman, “The Quest to Quantify the Value of Lost Load: A Critical Review of the Economics of Power Outages,” Electricity Journal 35, no. 8 (October 2022): 107187.
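The trade-off can be illustrated with a stylized cost-minimization sketch. Every input below is a hypothetical assumption chosen for illustration: a reserve cost per MW-year, a VOLL of $7.07/kWh (the GDP-based average discussed later in this report), and an assumed exponential decline in expected unserved energy as reserves grow. Total cost is the cost of carrying reserves plus the expected cost of outages (unserved energy times VOLL); the lowest-cost reserve level is the “optimal” reliability in this toy setting.

```python
import math

# Hypothetical inputs, for illustration only.
PEAK_MW = 10_000
RESERVE_COST_PER_MW_YEAR = 100_000.0   # $ per MW-year of standby capacity (assumed)
VOLL_PER_MWH = 7_070.0                 # $7.07/kWh expressed in $/MWh
BASE_UNSERVED_MWH = 50_000.0           # assumed expected unserved energy with zero reserves
DECAY_MW = 500.0                       # assumed scale at which reserves cut shortfalls


def expected_unserved_mwh(reserve_mw):
    """Assumed shape: each additional DECAY_MW of reserves cuts shortfalls by about 63%."""
    return BASE_UNSERVED_MWH * math.exp(-reserve_mw / DECAY_MW)


def total_cost(reserve_mw):
    """Cost of providing reserves plus the expected cost of outages to consumers."""
    reserve_cost = reserve_mw * RESERVE_COST_PER_MW_YEAR
    outage_cost = expected_unserved_mwh(reserve_mw) * VOLL_PER_MWH
    return reserve_cost + outage_cost


candidates = range(0, 4001, 50)        # reserve levels to test, in MW
best = min(candidates, key=total_cost)
print(f"Lowest-cost reserve: {best} MW ({best / PEAK_MW:.1%} of peak)")
```

Because VOLL differs by customer and by type of outage, changing `VOLL_PER_MWH` shifts the optimum, which is one reason a single optimal level is so hard to pin down in practice.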

In practice, identifying this optimal level of reliability is difficult, if not impossible, because different consumers and different types of consumers place different values on reliability. Moreover, reliability has characteristics of what economists call “public goods.” A grid operator cannot provide different levels of reliability to different local utilities based on their willingness to pay, and local utilities cannot provide different levels of reliability to their customers.[52]For example, if a tree branch knocks down a local distribution line, everyone loses power. It is not possible for customers to pay the local utility so that, if such an event occurs, they are spared the loss of power. (Of course, customers can install a backup generator so that, in the event of an outage, they can obtain power.)

For example, a hospital will place a high value on reliability because a lack of power can mean the difference between life and death for some patients. (This explains why most hospitals have backup generators.) Residential customers typically have lower values, especially if an outage lasts only a few seconds or minutes. A manufacturer of delicate and costly electronic equipment will place an extremely high value on reliability if an outage could damage that equipment. In most cases, however, it is difficult to measure these values directly.

When outages last a long time, the costs become very large indeed. The Public Safety Power Shutoffs (PSPS) initiated by electric utilities in California (and other western states) to reduce the risk of wildfires caused by their equipment—such as the faulty equipment that caused the 2018 Camp Fire in California, which killed 85 people—have typically lasted one to two days, although some have been as long as five days. The impacts on customers—spoiled food, closed businesses, lost wages, and so forth—are viewed simply as collateral damage and compensated minimally. For example, PG&E customers who lose power for three days receive a $25 rebate on their electric bill two months after a PSPS event, but shutoffs of less than two days are not compensated at all. (PG&E intends to spend billions of dollars to underground most of its electric system to reduce wildfire risk. Doing so will raise customer rates, perhaps as much as 50%.) Similarly, rolling blackouts, which are used to address the lack of sufficient generating supplies, can shut down manufacturers, resulting in millions of dollars in lost output, to say nothing of the hardships imposed on individuals.

Rising Outage Frequency

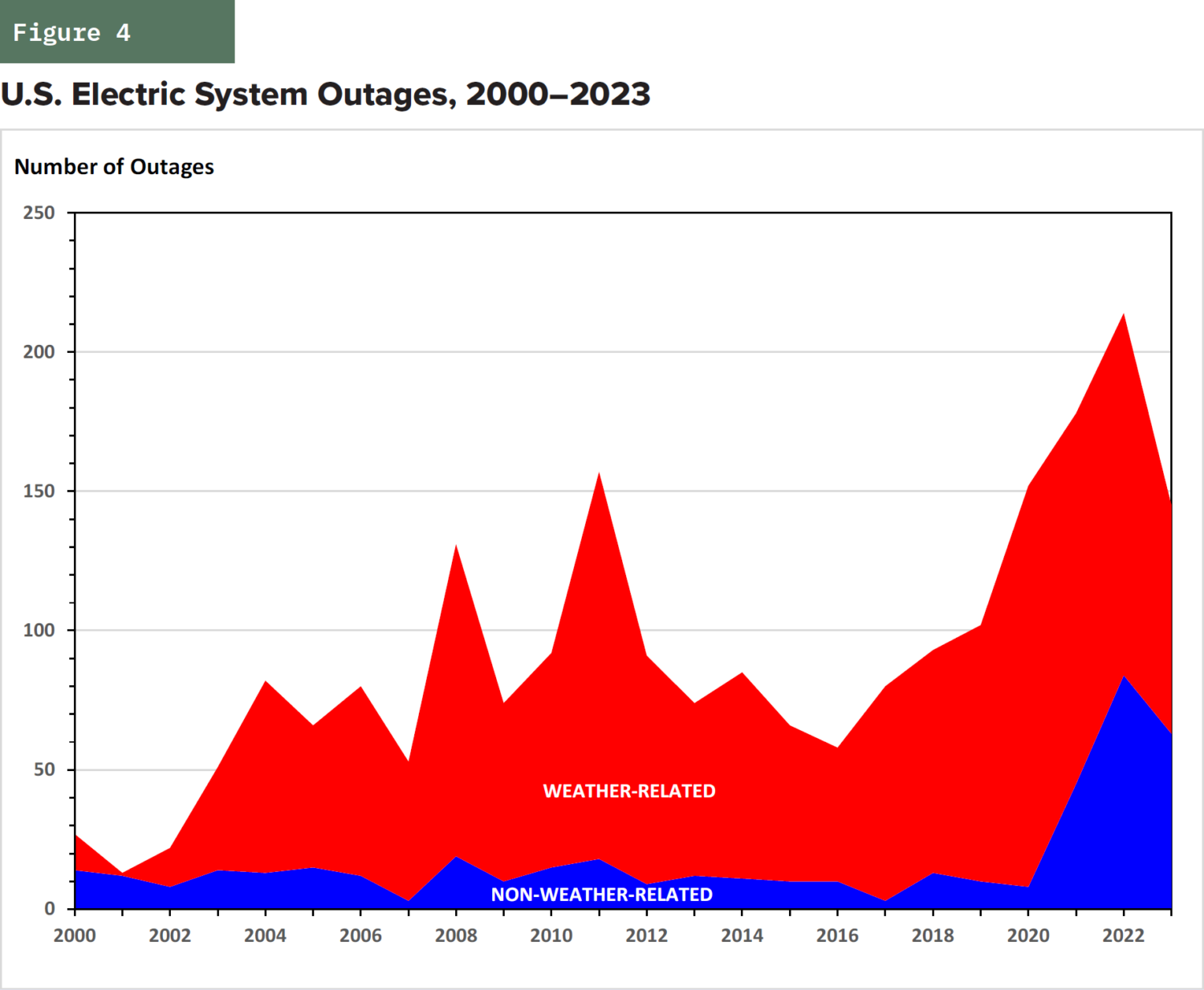

The frequency of both weather-related and non-weather-related outages in the U.S. has increased over the last two decades, and especially since 2020. Although some claim that the increase in weather-related outages has been caused by climate change,[53]Climate Central, “Weather-Related Power Outages Rising,” Apr. 24, 2024. the time frame (24 years) is too short for such claims to have any statistical validity. As shown in Figure 4, on an annual basis, weather-related outages are highly variable. Although the annual number of such outages averaged just over 70 for this period, the number of such outages has ranged from a low of just 1 in 2001 to 144 in 2020.

The more interesting trend concerns outages not related to weather. Between 2000 and 2020, these averaged about 11 per year, with little variation. However, in the most recent three years, non-weather-related outages averaged 64 per year. These include five load-shedding events, where utilities were forced to shut off power to certain customers because of inadequate supplies. (There has also been an increase in outages caused by cyberattacks and vandalism.)

Measuring the Cost of Outages

The most common measure used to estimate the value of unavailable electric service is called the value of lost load (VOLL),[54]There is a large literature on the economic value of electricity reliability. Several survey pieces include Joseph Eto et al., “Scoping Study on Trends in the Economic Value of Electricity Reliability to the U.S. Economy,” Electric Power Research Institute, June 2001; Thomas Schröder and Wilhelm Kuckshinrichs, “Value of Lost Load: An Efficient Economic Indicator for Power Supply Security? A Literature Review,” Frontiers in Energy Research 3, no. 55 (December 2015): 1–12. See also Gorman, “The Quest to Quantify the Value of Lost Load.” which is typically expressed in dollars per kilowatt-hour ($/kWh). VOLL can be considered an estimate of a customer’s willingness to pay to avoid losing electricity for a given period or a customer’s willingness to accept compensation for a service interruption.[55]Purists and behaviorists will note that willingness-to-pay typically is less than willingness-to-accept payment. For the purposes of this report, that difference is not important. VOLL depends on numerous factors, including the type of customer (residential, commercial, or industrial), the duration of an outage (generally, as the duration of an outage increases, so does VOLL), the time of year, the number of interruptions the customer has experienced, lost business revenues, and equipment damage. Hence, there is no single VOLL value, which makes establishing the optimal level of reliability more of a guessing game than an analytical exercise. Moreover, it is difficult for an electric utility to provide different levels of reliability to different customers. For example, if a power line is knocked down in a storm, everyone along that line is affected. 
Furthermore, restoring power after widespread storm damage cannot be done based on customers’ willingness to pay; it must be done based on the physical structure of the electric system.

There are many ways to measure VOLL and numerous estimates. The methods include macroeconomic estimates based on economic output; survey data (i.e., asking customers how much they would be willing to pay to avoid an outage of a specific duration or what they would be willing to accept as compensation for an outage of a specific duration); “bottom-up” analyses that estimate the costs of providing backup electricity, such as with a generator; adding up the costs of an outage (e.g., the value of spoiled food, lost business revenues, lost manufacturing value); and revealed choice estimates, which infer VOLL based on the choices consumers and businesses make, such as a business signing a contract with its local utility that allows the utility to interrupt service for up to a specified maximum time.[56]A survey of methodologies and previous studies can be found in Public Utility Commission of Texas, “Review of Value of Lost Load in the ERCOT Market,” Dec. 15, 2023. See also Severin Borenstein, James Bushnell, and Erin Mansur, “The Economics of Electricity Reliability,” Journal of Economic Perspectives 37 (Fall 2023): 81–106. The authors argue that advances in technology mean that reliability need not be treated as a public good.

Average VOLL Based on Macroeconomic Measures

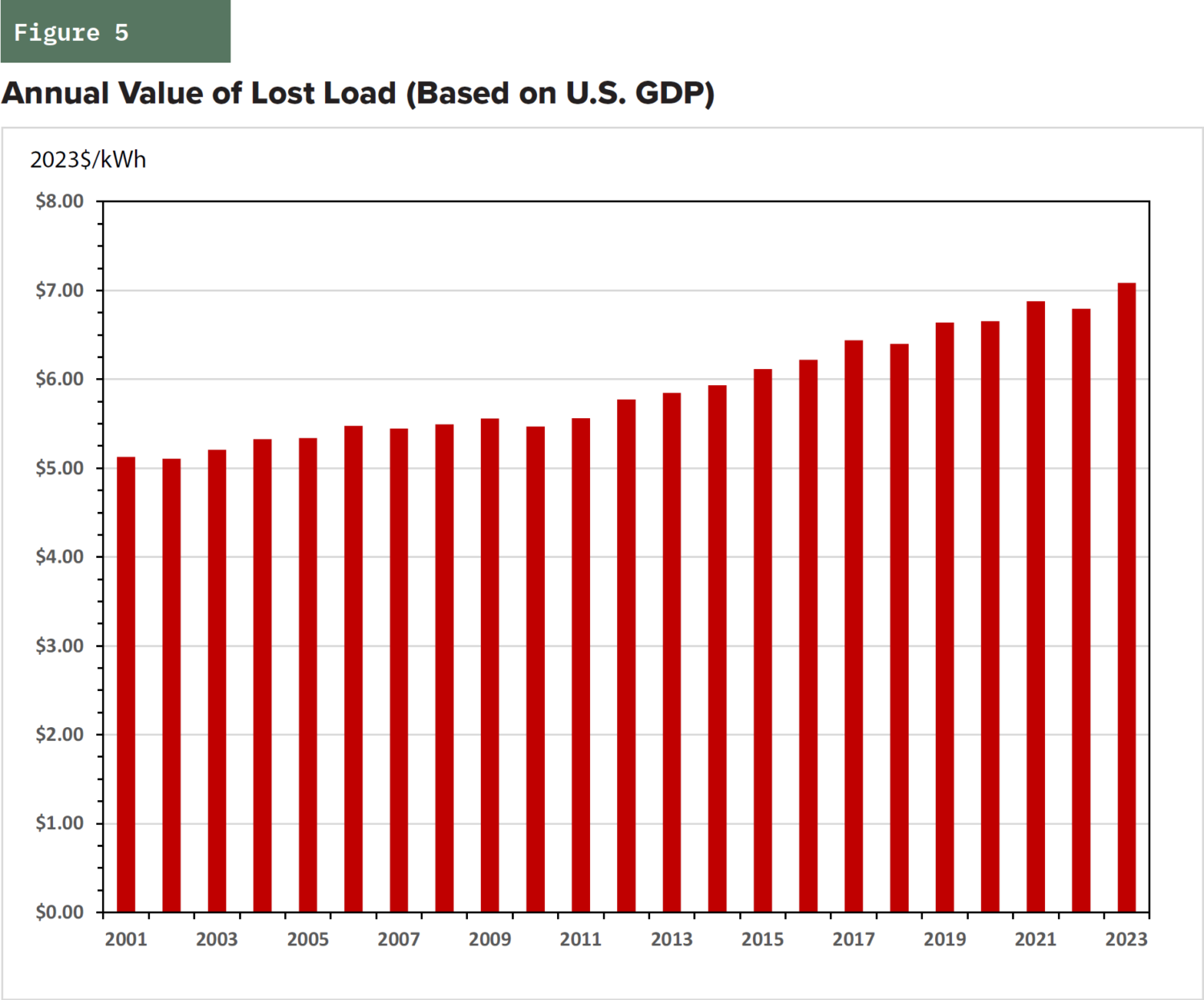

The simplest estimates of VOLL use macroeconomic values (e.g., gross domestic product [GDP], gross value added) divided by total electricity consumption. These measures provide a single estimate of electricity’s value to the U.S. economy. For example, in 2023, U.S. retail electricity sales totaled 3,874 TWh and GDP was about $27.4 trillion. The resulting average VOLL is $7.07 per kWh (Figure 5).[57]This value excludes electrical losses, which do not contribute to GDP. This represents a lower-bound value based on the assumption that the economic value of electricity used must exceed its cost. In other words, if consumers are willing to purchase electricity, they must place a higher value on that electricity than the price they pay. As Figure 5 also shows, as measured by economic output per kWh, VOLL has increased by almost 40% in real (inflation-adjusted) terms since 2001.
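The GDP-based estimate is a single division of economic output by electricity sold. A minimal sketch using the 2023 figures quoted above:

```python
GDP_2023_DOLLARS = 27.4e12       # about $27.4 trillion
RETAIL_SALES_2023_KWH = 3874e9   # 3,874 TWh = 3,874 billion kWh


def gdp_based_voll(gdp_dollars, sales_kwh):
    """Average economic output per kWh sold: a single, economy-wide VOLL estimate."""
    return gdp_dollars / sales_kwh


voll = gdp_based_voll(GDP_2023_DOLLARS, RETAIL_SALES_2023_KWH)
print(f"${voll:.2f} per kWh")
```

The same function makes the point of the next paragraph concrete: if mandated electrification raises `sales_kwh` faster than it raises `gdp_dollars`, the estimate falls.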

Ironically, mandated electrification will tend to reduce estimates of VOLL based on GDP by increasing electricity consumption while reducing economic growth. If businesses and consumers prefer to use fossil fuels, then forcing them to use electricity that has a lower economic value (otherwise, electrification would be voluntary) increases the cost of goods and services that now rely on electricity. These higher costs ripple through the economy, reducing economic growth, incomes, and jobs. Hence, increased electricity consumption coupled with lower GDP means lower GDP per kWh of electricity.

Furthermore, although a GDP-based VOLL is straightforward to calculate, it does not differentiate between different types of customers or different types of outages. To do that, detailed survey data typically are required.[58]Michael Sullivan et al., “Estimating Power System Interruption Costs: A Guidebook for Electric Utilities,” Lawrence Berkeley National Laboratory, July 2018; appendices B–D provide examples of surveys for residential, small commercial, and large industrial customers, respectively. For example, a 2013 study for different groups of customers in Austria estimated VOLL for a one-hour outage between $3.40/kWh and $22.25/kWh (2012$), depending on the time of day and season.[59]Johannes Reichl, Michael Schmidthaler, and Friedrich Schneider, “The Value of Supply Security: The Costs of Power Outages to Austrian Households, Firms, and the Public Sector,” Energy Economics 36 (March 2013): 256–61. (The article reports values in euros/kWh. These have been converted using the current euro–US dollar exchange rate.)

Other approaches estimate the cost of backup generation or use “bottom-up” analyses that add up the costs of spoiled food, lost business revenues and wages, and so forth. A report prepared for the Maryland Public Service Commission and the National Association of Regulatory Utility Commissioners (NARUC) estimated a VOLL for residential customers experiencing a four-day outage at between $11.00/kWh and $16.73/kWh (2011$).[60]Mark Burlingame and Patty Walton, “A Cost-Benefit Analysis of Various Electric Reliability Improvement Projects from the End Users’ Perspective,” National Association of Regulatory Utility Commissioners, November 2013 (NARUC 2013), table 6.

Damages to commercial and industrial customers depend on the nature of the affected operations. For example, the same NARUC study estimated bottom-up damages of a four-day outage for a large restaurant to be between $27,300 and $36,400.[61]Ibid., table 14. Based on that same study’s estimate of average annual restaurant electricity consumption of 49,000 kWh, the corresponding VOLL is about $51/kWh to $68/kWh.[62]Annual consumption of 49,000 kWh is equivalent to average daily consumption of 134 kWh, or 537 kWh over four days. Given an outage cost of $27,300–$36,400 for a four-day outage, the resulting VOLL is between ($27,300 / 537 kWh) = $50.84/kWh and ($36,400 / 537 kWh) = $67.78/kWh.
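The restaurant calculation in the note above can be reproduced in a few lines. The sketch rounds four-day consumption to 537 kWh, as the note does, and divides each end of the NARUC cost range by that figure.

```python
ANNUAL_KWH = 49_000
OUTAGE_DAYS = 4
OUTAGE_COST_RANGE = (27_300, 36_400)  # bottom-up damages in dollars (NARUC 2013, table 14)

# Energy the restaurant would have used during the outage, rounded as in the note.
unserved_kwh = round(ANNUAL_KWH / 365 * OUTAGE_DAYS)

# VOLL = outage cost divided by the energy not delivered.
low, high = (cost / unserved_kwh for cost in OUTAGE_COST_RANGE)
print(f"Unserved energy: {unserved_kwh} kWh")
print(f"VOLL: ${low:.2f}/kWh to ${high:.2f}/kWh")
```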

The Costs of Lost Convenience

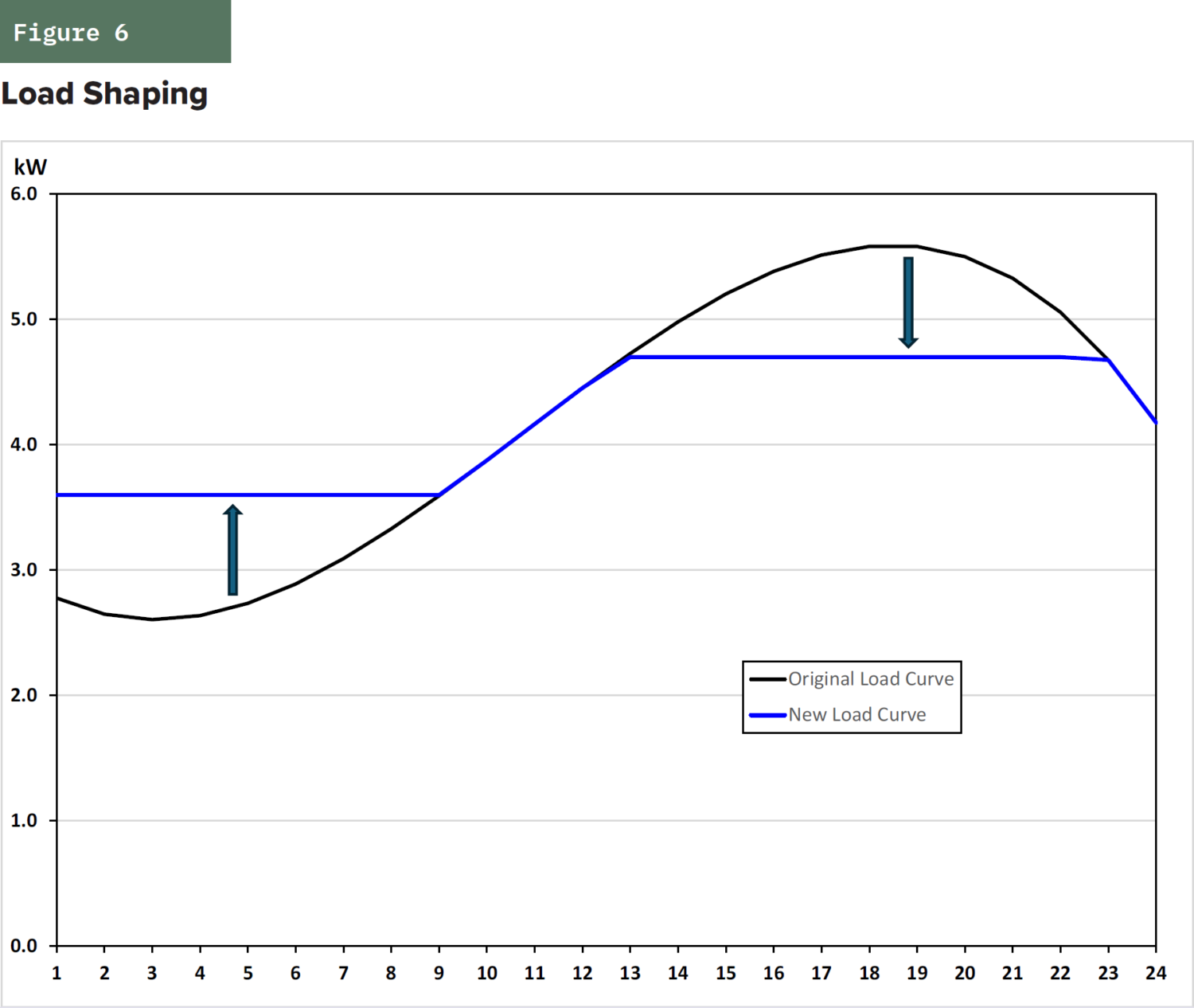

Actual outages are not the only source of lost economic and social value. As discussed previously, an increasingly recommended approach to reducing and deferring the need for additional electric infrastructure investment is to encourage—or force—consumers to shift their electricity consumption from when they would prefer (e.g., charging an EV in the early evening after returning home from work) to times when the overall system demand is lower. In doing so, utilities can reduce peak electricity demand (often called “load shaping”). Because transmission and distribution systems are sized to meet peak demand, load shaping can defer the need for new investments to increase those systems’ capacity and reduce costs (Figure 6). Moreover, load shaping can reduce the need for generating plants, such as combustion turbines, that are designed to operate only when demand peaks.
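A stylized example shows why shifting consumption lowers the peak that sizes the wires. The hourly feeder load profile and the 8 MW of EV charging below are hypothetical: moving the same charging energy from 6 p.m. to 9 p.m. into 1 a.m. to 4 a.m. leaves total energy unchanged but lowers the feeder's peak. Note what the calculation leaves out: the value EV owners lose by not charging when they would prefer.

```python
# Hypothetical hourly feeder load in MW; index 0 is midnight, index 18 is 6 p.m.
BASE_LOAD = [30, 28, 27, 26, 26, 28, 34, 40, 42, 41, 40, 40,
             41, 42, 44, 46, 50, 55, 58, 56, 50, 44, 38, 33]
EV_MW = 8                       # assumed EV charging load in each charging hour
EVENING_HOURS = range(18, 21)   # charging on arrival home (6 p.m. to 9 p.m.)
OVERNIGHT_HOURS = range(1, 4)   # shifted charging window (1 a.m. to 4 a.m.)


def add_load(profile, hours, mw):
    """Return a copy of the profile with `mw` added in each listed hour."""
    shaped = list(profile)
    for hour in hours:
        shaped[hour] += mw
    return shaped


unmanaged = add_load(BASE_LOAD, EVENING_HOURS, EV_MW)
managed = add_load(BASE_LOAD, OVERNIGHT_HOURS, EV_MW)

# Same energy delivered, different peak: the peak is what sizes the wires.
assert sum(unmanaged) == sum(managed)
print(f"Unmanaged peak: {max(unmanaged)} MW, managed peak: {max(managed)} MW")
```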

Although shifting electricity consumption to hours when demand is lower seems like an ideal economic solution because it uses existing capacity more efficiently, the costs to consumers in terms of reduced access are rarely accounted for.

As discussed previously, there are three mechanisms for reducing consumer access to electricity: TOU pricing, direct load controls, and interruptible power contracts. All three allow utilities to shift or reduce consumption at times of greatest demand and thus delay, or entirely avoid, investments in additional infrastructure.

Reduced access can be mandatory. For example, utilities may force customers into TOU rate schedules. They may also require customers to install equipment that enables a utility to shut off electricity remotely to end uses that consume significant quantities of electricity (e.g., air conditioners, EV chargers) when demand exceeds available supplies. Some utilities also require large industrial customers to agree to interruptible rates.

In contrast with traditional reliability planning, which focuses on ensuring that there is sufficient infrastructure to meet demand, the primary goal of managing electricity consumption is to defer or avoid the new infrastructure investments required to meet increased demand, while preventing the outages that could occur if the access restrictions were not in place. Because restricted access substitutes for an outage, a customer’s VOLL represents an upper-bound estimate of the cost of restricted access. (Otherwise, the customer would prefer a complete outage to restricted access during which some electricity is available.) However, given the magnitude of reported VOLL estimates, the costs of reduced access can still be substantial.

As electrification efforts have increased, so have calls to manage consumers’ increased consumption. The value of deferring an infrastructure investment can be thought of as the resulting decrease in the present value of that investment’s cost. However, optimal deferral estimates exclude the indirect costs that consumers bear when they cannot use electricity when they want. Consumers may also bear direct costs, such as much higher TOU rates intended to “encourage” them to reduce consumption during peak load periods.[63]The traditional justification for TOU pricing has been to reflect the higher marginal cost of peaking generators. But if, as envisioned, generation is served with a combination of wind, solar, and storage, whose marginal costs are near zero, then that justification no longer applies.

For example, suppose that EV chargers are being installed in a neighborhood of 1,000 homes, all of which are expected to have EV chargers one year from now. However, the existing local distribution system (poles, wires, and the local substation) can safely support only up to 100 homes with EV chargers. (New pole-mounted transformers will need to be installed to serve the homes with EV chargers.) Suppose the cost to upgrade the local distribution system so that all 1,000 homes can install EV chargers will be $100 million, and suppose that delaying the investment reduces its present value cost to $50 million.
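In this example, the value of deferral is the $50 million difference between the two present values. The example does not say how long a delay or what discount rate produces that halving; the sketch below assumes a hypothetical 7 percent discount rate and solves for the implied deferral period.

```python
import math


def present_value(cost: float, rate: float, years: float) -> float:
    """Present value of a cost incurred `years` from now at discount rate `rate`."""
    return cost / (1 + rate) ** years


def deferral_years_for(cost: float, target_pv: float, rate: float) -> float:
    """Years of deferral needed for the PV of `cost` to fall to `target_pv`."""
    return math.log(cost / target_pv) / math.log(1 + rate)


RATE = 0.07  # hypothetical discount rate; the example does not state one
years = deferral_years_for(100e6, 50e6, RATE)
print(f"deferral needed: {years:.1f} years")                 # 10.2
print(f"PV check: ${present_value(100e6, RATE, years):,.0f}")  # $50,000,000
```

Under this assumption, halving the present value requires deferring the upgrade by roughly a decade; a higher discount rate would shorten that period.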

To achieve the delay, the utility installs load-control equipment that limits when the homeowners in the neighborhood can charge their EVs. As more EV chargers are installed, the cost of installing the load-control equipment increases. The optimal deferral time is when the overall present value cost (the distribution system upgrades plus the load controls) is minimized.[64]For a mathematical derivation, see Charles Feinstein and Jonathan Lesser, “Defining Distributed Resource Planning,” Energy Journal 18, no. 1 supp. (June 1997).
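The trade-off in this paragraph can be illustrated with a brute-force search over deferral periods. This is a minimal sketch, not the derivation in Feinstein and Lesser (1997): it assumes a hypothetical $100 million upgrade, a 7 percent discount rate, and load-control spending that rises by $1.5 million each year of deferral as more chargers are installed. Consistent with the critique in this section, it counts no inconvenience cost for customers.

```python
def total_pv_cost(defer_years: int, upgrade_cost: float, rate: float,
                  control_step: float) -> float:
    """PV of (upgrade built after `defer_years`) + (load controls until then).

    Hypothetical cost model: load-control spending in year t is control_step * t,
    rising each year as more EV chargers are installed.
    """
    upgrade_pv = upgrade_cost / (1 + rate) ** defer_years
    controls_pv = sum(control_step * t / (1 + rate) ** t
                      for t in range(1, defer_years + 1))
    return upgrade_pv + controls_pv


def optimal_deferral(upgrade_cost: float, rate: float, control_step: float,
                     max_years: int = 30) -> int:
    """Brute-force search for the deferral period with the lowest total PV cost."""
    return min(range(max_years + 1),
               key=lambda years: total_pv_cost(years, upgrade_cost, rate, control_step))


# Hypothetical inputs in $ millions: $100M upgrade, 7% discount rate,
# load-control costs rising by $1.5M per year of deferral.
best = optimal_deferral(100.0, 0.07, 1.5)
print("least-cost deferral:", best, "years")  # 4
```

In this toy model, deferring into year t pays off only while the interest saved on the upgrade ($100 million × 0.07 = $7 million) exceeds that year’s load-control cost ($1.5 million × t). That holds through year 4 ($6 million) but not year 5 ($7.5 million).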

Planners often hail this approach as the least-cost one, but that calculation omits the costs borne by consumers. If TOU rates, direct load control, and interruptible rates are mandatory, consumers will be worse off. Moreover, residential consumers are likely to be worse off even if they voluntarily accept either TOU pricing or direct load controls.

If a customer values using electricity during his preferred hours and is therefore reluctant to change when he consumes it, a forced load shift will increase the consumer’s overall cost, and the consumer will be unambiguously worse off. (This is what happens in most TOU pricing schemes.) Even if the TOU pricing scheme does not increase the consumer’s total electricity expenditure (for example, because the consumer receives an additional rebate on his bill), the consumer still loses convenience value that exceeds any bill savings. Utilities also recover the cost of the necessary equipment (TOU meters and load-control equipment) from customers through the rates they charge. Hence, customers are not only inconvenienced but must also pay for the equipment used to inconvenience them.

For commercial and industrial customers, the cost of TOU pricing can be estimated based on the increase in the cost of providing the same good or service at the same time, the change in the cost of providing the same good or service during off-peak hours, or the value of lost output.[65]Technically, the losses would be measured based on the reduction in producer’s surplus. Suppose a restaurant faces higher TOU rates during dinner hours. Because the restaurant cannot realistically shift its consumption (e.g., by serving dinner at 2 a.m.), its cost to serve customers will increase. An industrial customer facing higher TOU prices may shift production to off-peak hours, which may entail hiring additional workers or paying existing workers higher wages to work at night. Alternatively, the industrial customer could install a generator if doing so costs less than paying the TOU rates.[66]Involuntary load shedding is clearly different, with costs measured by the industrial customer’s VOLL.
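A firm facing a new TOU tariff will presumably choose the cheapest of its available responses, so a rough estimate of the tariff’s cost to that firm is the added cost of that cheapest response. The sketch below uses hypothetical monthly figures for a single plant; as footnote 65 notes, a rigorous measure would be the reduction in producer’s surplus, which this simplification approximates only when output and prices are otherwise unchanged.

```python
def cheapest_response(added_costs: dict[str, float]) -> tuple[str, float]:
    """Return the response with the smallest added monthly cost."""
    return min(added_costs.items(), key=lambda item: item[1])


# Hypothetical added monthly costs ($) for one plant under a new TOU tariff,
# each measured against its bill and operations under the old flat rate.
responses = {
    "pay peak TOU rates, keep schedule": 12_000.0,
    "shift production to night shift (wage premium)": 9_000.0,
    "run on-site generator during peak hours": 10_500.0,
    "curtail peak-hour output (lost margin)": 15_000.0,
}

choice, cost = cheapest_response(responses)
print(f"{choice}: ${cost:,.0f} per month")
```

Even the cheapest response is a cost the firm did not bear under the flat rate, and it does not appear in a deferral calculation that counts only utility-side costs.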

Excluding the costs of customer inconvenience because of diminished access to electricity, especially as electrification policies take effect, represents a major flaw in current utility planning efforts and policymaking. Remedying that flaw would first require detailed studies to determine how different customers value lost convenience and not just lost load. Those studies can be conducted using many of the same methodologies that have been used to evaluate the value of lost load. And the estimated inconvenience values should be incorporated into comparative analyses of infrastructure investment costs versus load-management policies designed to defer such costs.

Conclusions and Recommendations

Electricity is not just another commodity, like wheat or corn. It is an increasingly vital service necessary for modern life. Thus, regardless of the efficacy of federal and state policies forcing additional electrification on the public, those policies must ensure that the infrastructure exists to provide affordable and reliable electricity to consumers when they most value it.

Instead, electric utilities and policymakers are focused on “managing” electricity demand and reducing the need for infrastructure investments, through new pricing structures that penalize consumers for using electricity at times of peak demand and, more recently, through load-control devices that allow utilities to remotely limit customers’ electricity consumption. This approach belies claims that electrification policies will not only benefit the planet by reducing carbon emissions but also benefit consumers.

Three steps would address these issues. First, mandatory electrification efforts should cease. Instead, consumers should determine for themselves whether they wish to purchase an EV, replace an existing gas furnace with an electric heat pump, and so forth.

Second, because electric utilities cannot eliminate electrification mandates (although they can lobby regulators and politicians to do so), utilities’ least-cost resource-planning efforts should incorporate consumers’ inconvenience costs directly into infrastructure planning.

Critics may object that these costs are difficult to measure directly and that different individuals and businesses are likely to have very different inconvenience values. Nevertheless, the uncertainty of inconvenience values can be addressed by evaluating how sensitive infrastructure investment decisions are to different values. For example, if incorporating even a low inconvenience value implies that deferring new infrastructure is no longer the least-cost strategy, then building that infrastructure is warranted. If, instead, deferral is warranted at a middle range of inconvenience values, the utility may wish to gather more detailed information from its customers.
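A sensitivity analysis of this kind can be sketched by sweeping a range of inconvenience values and comparing the two strategies. The $100 million immediate upgrade and the $50 million present value of the deferred upgrade come from the earlier neighborhood example; the $20 million present value of load controls, the 10-year deferral, the 7 percent discount rate, and the per-home inconvenience values are all hypothetical.

```python
def annuity_factor(rate: float, years: int) -> float:
    """PV of $1 received at the end of each year for `years` years."""
    return (1 - (1 + rate) ** -years) / rate


def deferral_pv(inconvenience_per_home: float, homes: int = 1_000, rate: float = 0.07,
                years: int = 10, deferred_upgrade_pv: float = 50e6,
                load_control_pv: float = 20e6) -> float:
    """PV cost of deferring: later upgrade + load controls + customers' inconvenience."""
    inconvenience_pv = inconvenience_per_home * homes * annuity_factor(rate, years)
    return deferred_upgrade_pv + load_control_pv + inconvenience_pv


BUILD_NOW_PV = 100e6  # cost of upgrading immediately, from the example

for value in (500, 2_000, 5_000):  # hypothetical $/home/year of lost convenience
    strategy = "defer" if deferral_pv(value) < BUILD_NOW_PV else "build now"
    print(f"${value:,}/home/yr -> {strategy}")

# Inconvenience value at which the two strategies cost the same.
breakeven = (BUILD_NOW_PV - deferral_pv(0)) / (1_000 * annuity_factor(0.07, 10))
print(f"break-even: about ${breakeven:,.0f} per home per year")
```

Under these assumptions the break-even value is a little over $4,000 per home per year. Where that figure falls relative to plausible inconvenience values indicates which of the cases described in the paragraph applies.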

Third, TOU pricing tariffs and direct load controls should be implemented only for those consumers who wish to use them. Moreover, consumers who decide they wish to return to standard tariffs or no longer want their local utility to remotely control certain appliances should be able to do so.

The focus on mandatory electrification is being driven by demands to reduce carbon emissions. But by making emissions reductions the sine qua non of utility planning, policymakers ignore the increasing value of electricity to society. Carbon emissions can be reduced without sacrificing ample supplies of affordable and reliable electricity. By emphasizing the development of emissions-free nuclear power, especially smaller, modular facilities that can be located near cities and other load centers, rather than wind, solar, and storage resources, policymakers can reduce emissions at a far lower cost.[67]For an empirical analysis of these costs for Oregon and Washington State, see Jonathan Lesser and Mitchell Rolling, “The Crippling Costs of Electrification and Net Zero Energy Policies in the Pacific Northwest,” Discovery Institute, September 2024. This study shows that full electrification and net-zero emissions can be achieved with gas and nuclear power at about one-fifth the cost of a wind-solar-battery-storage strategy, even if costs for the latter fall by half from their present levels. Locating nuclear plants near load centers will also reduce the need for the costly high-voltage transmission lines required to bring wind and solar power from rural areas to those load centers.

Appendix: How Retail Electric Utility Rates Are Set

Retail electric utility rates, unlike the prices of milk and eggs, are not set by market forces. Instead, because electric service is best provided by a single company, rates are set by regulators.[68]A complete discussion of how regulated utility rates are determined is beyond the scope of this report. For a discussion, see Jonathan Lesser and Leonardo Giacchino, Fundamentals of Energy Regulation, 3rd ed. (Public Utilities Reports, Inc., 2019). Some states have introduced retail electric competition, in which customers can select the company from which they purchase electricity. Regardless, the local electric utility delivers the electricity and must serve as a provider of last resort. These rates typically have multiple components, including a fixed “ready-to-serve” charge that is supposed to cover the nonvarying costs of providing service (e.g., installing and maintaining wires and poles, sending out bills) and a variable charge that reflects the cost of the electricity itself. Large commercial and industrial customers typically are also charged based on their peak electric demand over a month, to capture the fact that electric utilities must build their systems large enough to meet those peak demands. More recently, many customer bills have included so-called adders for specific costs, such as fuel, energy conservation programs, other social programs, and environmental costs.
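The bill components described above can be combined in a simple function. This is a sketch with hypothetical rates and usage; it assumes adders are charged per kWh and ignores features such as tiered, seasonal, or minimum charges that real tariffs often include.

```python
def monthly_bill(kwh: float, *, customer_charge: float, energy_rate: float,
                 adders_per_kwh: float = 0.0, peak_kw: float = 0.0,
                 demand_rate: float = 0.0) -> float:
    """Monthly bill = fixed customer charge + energy charge + adders + demand charge."""
    energy_charge = kwh * energy_rate
    adders = kwh * adders_per_kwh
    demand_charge = peak_kw * demand_rate  # typically only for large C&I customers
    return customer_charge + energy_charge + adders + demand_charge


# Hypothetical tariffs and usage, for illustration only.
residential = monthly_bill(900, customer_charge=15.0, energy_rate=0.12, adders_per_kwh=0.01)
commercial = monthly_bill(40_000, customer_charge=50.0, energy_rate=0.09,
                          adders_per_kwh=0.005, peak_kw=150, demand_rate=12.0)
print(f"residential: ${residential:,.2f}")  # $132.00
print(f"commercial:  ${commercial:,.2f}")   # $5,650.00
```

In the commercial example, the demand charge ($1,800) is about one-third of the bill, which reflects how strongly a large customer’s monthly peak drives its costs.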

Together, the prices charged for each group of customers are called tariffs. Setting the tariffs begins by establishing a utility’s revenue requirement, which includes the costs of operating and maintaining its system, as well as other costs necessary to run its business, such as administration. After the utility’s overall revenue requirement is established, it is allocated among the different customer groups (e.g., residential, commercial, industrial). Variable costs (e.g., the cost of fuel used in generators) typically are allocated based on each group’s consumption over a historical period. Fixed costs typically are allocated based on each group’s contribution to overall peak demand over that same period.[69]For a discussion of cost allocation methods, see National Association of Regulatory Utility Commissioners, Electric Utility Cost Allocation Manual, January 1992.

After the costs have been allocated, the tariffs are designed so that the utility recovers those costs based on its forecast of future consumption and peak demand.
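The allocation and rate-design steps described in the two preceding paragraphs can be sketched for a hypothetical utility. All figures are invented for illustration, and the sketch recovers each class’s entire allocation through a single per-kWh rate; actual rate design also uses customer and demand charges, and cost allocation manuals describe many alternative allocators.

```python
def allocate_revenue(variable_cost: float, fixed_cost: float,
                     energy_gwh: dict[str, float], peak_mw: dict[str, float]) -> dict[str, float]:
    """Allocate variable costs by energy share and fixed costs by peak-demand share."""
    total_energy = sum(energy_gwh.values())
    total_peak = sum(peak_mw.values())
    return {
        cls: variable_cost * energy_gwh[cls] / total_energy
             + fixed_cost * peak_mw[cls] / total_peak
        for cls in energy_gwh
    }


def per_kwh_rates(allocated: dict[str, float], forecast_kwh: dict[str, float]) -> dict[str, float]:
    """Simplest rate design: recover each class's allocation over its forecast sales."""
    return {cls: allocated[cls] / forecast_kwh[cls] for cls in allocated}


# Hypothetical utility: $400M variable + $600M fixed revenue requirement.
energy_gwh = {"residential": 4_000, "commercial": 3_000, "industrial": 3_000}  # historical
peak_mw = {"residential": 2_000, "commercial": 1_200, "industrial": 800}       # historical
forecast_kwh = {"residential": 4.6e9, "commercial": 3.0e9, "industrial": 3.0e9}

allocated = allocate_revenue(400e6, 600e6, energy_gwh, peak_mw)
for cls, rate in per_kwh_rates(allocated, forecast_kwh).items():
    print(f"{cls:<12} ${allocated[cls] / 1e6:,.0f}M  ->  ${rate:.3f}/kWh")
```

Because the residential class accounts for 40 percent of energy but 50 percent of peak demand, it is allocated a larger share of fixed costs than of variable costs.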

Finally, electric utilities cannot simply raise prices when demand is greatest, as airlines and ride-share companies do.[70]This is sometimes called “surge pricing,” which may violate U.S. antitrust laws; see Baker Botts, “U.S. Antitrust Law and Algorithmic Pricing,” June 26, 2023. Hence, a utility that wishes to introduce time-of-use (TOU) pricing (discussed elsewhere in this paper) must offset the higher prices charged when electricity demand is greatest with lower prices when demand is lower. However, actual applications of TOU pricing show that many consumers end up with higher electric bills.
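The offsetting requirement can be expressed as a revenue-neutrality condition. The sketch below assumes, hypothetically, a $0.15/kWh flat rate, a $0.30/kWh peak rate, and a class that uses 25 percent of its electricity during peak hours. It solves for the off-peak rate that leaves class revenue unchanged, assuming no customer changes its usage. This is one simple way to set such a tariff, not any particular utility’s method.

```python
def revenue_neutral_off_peak_rate(flat_rate: float, peak_rate: float,
                                  class_peak_share: float) -> float:
    """Off-peak price that leaves class revenue unchanged, if usage does not change."""
    return (flat_rate - peak_rate * class_peak_share) / (1 - class_peak_share)


def tou_bill(kwh: float, peak_share: float, peak_rate: float, off_peak_rate: float) -> float:
    """Bill for `kwh` when `peak_share` of it falls in peak hours."""
    return kwh * (peak_share * peak_rate + (1 - peak_share) * off_peak_rate)


FLAT, PEAK, CLASS_PEAK_SHARE = 0.15, 0.30, 0.25  # hypothetical $/kWh and class usage share
off_peak = revenue_neutral_off_peak_rate(FLAT, PEAK, CLASS_PEAK_SHARE)  # 0.10

for label, share in [("class-average customer", 0.25),
                     ("evening-heavy customer", 0.40),
                     ("off-peak-heavy customer", 0.10)]:
    flat_bill = 800 * FLAT
    tou = tou_bill(800, share, PEAK, off_peak)
    print(f"{label}: flat ${flat_bill:.2f} vs TOU ${tou:.2f}")
```

With 800 kWh per month, the class-average customer pays $120 under both tariffs, while the customer using 40 percent of its electricity at peak (for example, by charging an EV after work) sees its bill rise from $120 to $144 unless it changes when it consumes electricity.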